Imagine a team relying on GPT-5 to draft sensitive reports or summarize confidential data.

What happens if prompts are mishandled, outputs are inaccurate, or private information leaks?

These are real risks organizations face when adopting AI at scale, raising questions about access control, prompt safety, and employee awareness.

A recent Gartner report highlighted that nearly 40% of enterprises have integrated generative AI across multiple business units, yet less than 30% of leaders feel confident about the return on investment.

As AI becomes an integral part of workplace operations, addressing these concerns proactively is essential. Without proper safeguards, AI tools can inadvertently compromise data privacy, generate misleading information, or operate outside ethical boundaries.

To use AI effectively, businesses need strong security measures, clear usage policies, and ongoing employee training. By putting these practices into place, organizations can keep AI-driven workflows productive while upholding trust and compliance.

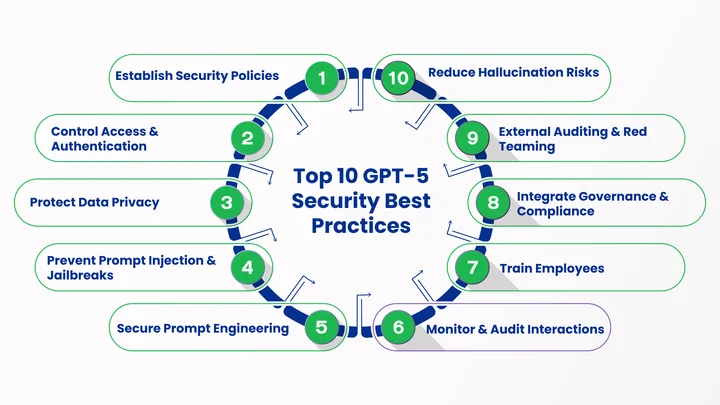

In this blog, we will explore the top 10 best practices for using GPT-5 securely at work in 2026.

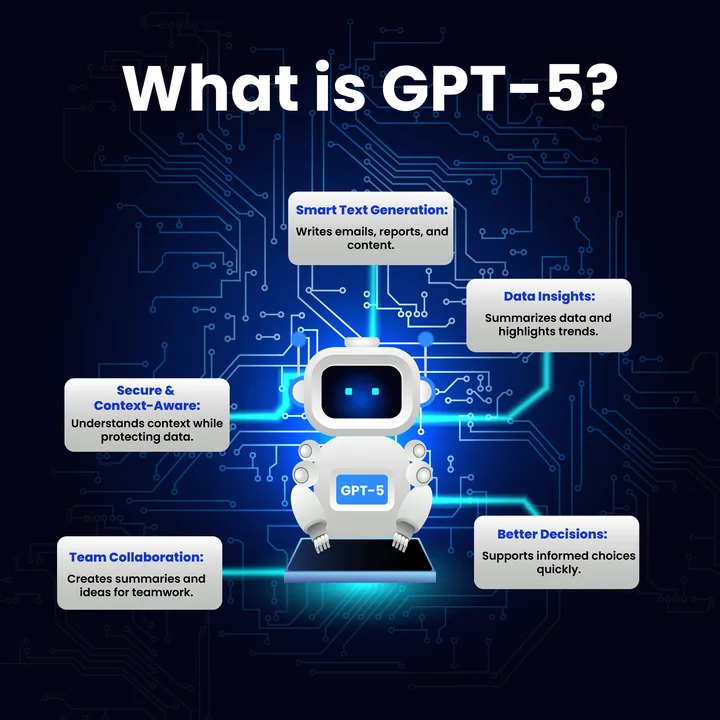

What is GPT-5 and How Does It Work in the Workplace?

Generative AI has changed how businesses operate, and GPT-5 is leading this transformation. This advanced AI model goes beyond simple text generation; it can assist with:

- Writing

- Data analysis

- Decision-making

- Collaboration

In modern workplaces, GPT-5 serves as a productivity partner, helping teams work efficiently while maintaining compliance.

Unlike traditional tools, GPT-5 can understand context, summarize complex information, and even suggest creative solutions. Companies that adopt GPT-5 strategically gain a competitive edge, particularly when employees follow security measures and implement AI security best practices in 2026.

The real question for businesses isn’t just what GPT-5 can do, but how it can be used securely in everyday workplace operations.

In everyday business operations, GPT-5 acts as a versatile assistant that simplifies writing, data analysis, and collaboration. By understanding context and producing human-like responses, it automates repetitive work so teams can concentrate on strategic tasks.

Employees can use AI writing tips and content creation techniques to improve productivity, raise content quality, and maintain consistency across reports, emails, and presentations.

The AI also supports decision-making by summarizing data insights and offering actionable recommendations, all while following GPT-5 workplace guidelines and maintaining data privacy.

To put these benefits into action, teams can focus on key areas of application:

- Automating Repetitive Writing Tasks: Draft emails, reports, and documentation without exposing confidential information.

- Boosting Content Creation Productivity: Use AI safely to save time while maintaining compliance.

- Better Decision-Making: Use AI to analyze datasets, summarize trends, and provide actionable insights.

- Supporting Collaboration and Communication: Generate meeting summaries, brainstorm content, and streamline team editing workflows.

- Maintaining Security and Compliance: Ensure security compliance, implement prompt injection protection, and adhere to internal policies for AI tools.

By using GPT-5 in daily operations, businesses can balance efficiency, creativity, and security, transforming the way teams work while minimizing risks.

With GPT-5 now becoming part of everyday workflows, following these 10 best practices can help teams maximize productivity and use AI securely in the workplace.

1. Establish Enterprise GPT-5 Security Policies

Establishing security policies is essential for safely integrating AI into workplace operations. These policies provide clear guidelines on how employees should access and use GPT-5, ensuring sensitive business data is protected and potential risks are minimized.

A strong policy framework should include access control for GPT-5 tools, prompt injection protection, rules for ethical AI usage, and protocols for monitoring AI outputs.

To implement these policies effectively, organizations should train teams on responsible AI practices, define internal policies for AI tools, and conduct regular audits to verify compliance.

Furthermore, by placing security at the core of GPT-5 usage, companies can maximize productivity, reduce exposure to model jailbreak risks, and confidently leverage AI while maintaining regulatory and ethical standards.

2. Implement Access Control and Authentication for GPT-5 Tools

Controlling access to GPT-5 in 2026 is essential for keeping company data secure and ensuring AI tools are used responsibly. By limiting access to authorized employees, organizations can reduce the risk of misuse and maintain compliance with security standards.

The steps include:

- Role-based permissions: Assign access based on employee roles to ensure sensitive data is protected.

- Secure login protocols: Require strong passwords and periodic updates to prevent unauthorized use.

- Multi-factor authentication: Add an extra layer of security for all users of GPT-5 tools.

- Regular access reviews: Periodically audit and adjust user permissions as roles change.

- Monitoring usage: Track AI activity to ensure it aligns with internal policies and security best practices.

By putting strong access control and authentication in place, organizations can protect data privacy and support the safe, responsible, and effective use of GPT-5 tools.
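As a rough illustration of the role-based permissions and multi-factor checks listed above, here is a minimal Python sketch. The role names, the `ROLE_PERMISSIONS` mapping, and the `can_use` helper are hypothetical and not part of any GPT-5 or OpenAI API; in practice these checks would usually live in your identity provider or API gateway.

```python
from dataclasses import dataclass

# Hypothetical role-to-capability mapping. Real roles would normally come
# from the organization's identity provider, not a hard-coded dictionary.
ROLE_PERMISSIONS = {
    "analyst": {"summarize", "draft"},
    "hr": {"draft"},
    "admin": {"summarize", "draft", "configure"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool


def can_use(user: User, action: str) -> bool:
    """Allow an action only for MFA-verified users whose role grants it."""
    if not user.mfa_verified:
        return False
    # Unknown roles get an empty permission set, so access is denied by default.
    return action in ROLE_PERMISSIONS.get(user.role, set())
```

Denying by default for unknown roles means a newly created or misconfigured account cannot reach the tool until someone explicitly grants it access.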

3. Protect Data Privacy When Using GPT-5

Protecting data privacy is critical when integrating GPT-5 into workplace operations. Since AI tools often process sensitive business information, there is a risk of exposing confidential data if proper safeguards are not in place. Therefore, organizations should implement strong encryption protocols and limit the type of data shared with GPT-5.

In addition, it is essential to ensure that all AI interactions comply with existing data protection regulations. Employees must be trained to recognize what information can safely be processed by the AI and to follow guidelines to avoid unintentional data leaks.

By doing so, companies can confidently use GPT-5 for better productivity while maintaining trust and security across the organization.
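One way to limit the data shared with GPT-5 is to redact obvious identifiers before a prompt leaves the company network. The sketch below is a simplified illustration only: the `PATTERNS` table and the `redact` helper are hypothetical, the regular expressions cover just a few common formats, and a real deployment would likely rely on a dedicated data loss prevention tool.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com, SSN 123-45-6789")` returns `"Contact [EMAIL], SSN [SSN]"`.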

4. Prevent Prompt Injection and Model Jailbreak Risks

As organizations increasingly rely on GPT-5 for business tasks, it is essential to safeguard against prompt injection and model jailbreak risks. Prompt injection occurs when malicious or poorly constructed inputs, including instructions hidden in documents or web pages the model is asked to process, trick the AI into producing unintended outputs.

Similarly, model jailbreak refers to attempts to bypass the AI’s safety protocols, which could expose sensitive data or generate harmful content.

To mitigate these risks, companies can take the following steps:

- Validate all prompts: Ensure input prompts are carefully reviewed to prevent malicious instructions.

- Limit sensitive data input: Avoid feeding confidential or critical business information directly into the AI.

- Red-teaming exercises: Regularly test AI responses to identify vulnerabilities before they are exploited.

- Follow GPT-5 workplace guidelines: Train employees to recognize unsafe prompts and ensure consistent safe usage.

- Monitor AI outputs: Continuously review AI-generated responses to detect anomalies or unexpected behavior.

By consciously taking these precautions, organizations can maintain secure and reliable AI operations while leveraging GPT-5’s full potential for productivity, content creation, and decision-making.
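To make the "validate all prompts" step more concrete, here is a minimal screening sketch in Python. The phrase list and the `screen_prompt` function are assumptions made for illustration. Keyword matching is only a coarse first filter and is easy to evade, so it should complement, not replace, red teaming and output monitoring.

```python
import re

# Hypothetical phrases often associated with injection attempts.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system prompt|hidden instructions)", re.I),
    re.compile(r"disregard (your|the) (rules|guidelines|policies)", re.I),
]


def screen_prompt(prompt: str) -> list[str]:
    """Return the patterns that matched; an empty list means nothing was flagged."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(prompt)]
```

A prompt that returns a non-empty list could be routed to a human reviewer rather than blocked outright, which keeps false positives from interrupting legitimate work.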

5. Secure Prompt Engineering for GPT-5 in the Workplace

Prompt engineering is the practice of crafting inputs that guide GPT-5 to produce accurate, safe, and relevant outputs. In the workplace, secure prompt engineering ensures that AI responses align with business objectives while minimizing the risk of exposing sensitive information.

Effective prompts reduce errors, improve consistency, and help employees get reliable results from GPT-5.

For example, a marketing team drafting an email campaign might use a prompt like, “Generate a professional client update email based on the following approved information: [insert non-confidential content]. Avoid including sensitive financial details or internal project codes.”

This approach helps GPT-5 produce useful content without accidentally revealing private data. By implementing workplace guidelines and secure prompt engineering practices, an organization can make full use of AI while preserving data privacy, consistency, and operational safety.
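The example prompt above can be turned into a reusable template so that every employee sends the same constraints. The following is a sketch under stated assumptions: `PROMPT_TEMPLATE`, `build_prompt`, and the `<approved>` delimiter tags are hypothetical conventions, not something GPT-5 requires.

```python
# Hypothetical template; the <approved> tags are an illustrative delimiter.
PROMPT_TEMPLATE = (
    "Generate a professional client update email based only on the approved "
    "information between the <approved> tags.\n"
    "Avoid including sensitive financial details or internal project codes.\n"
    "<approved>\n{content}\n</approved>"
)


def build_prompt(approved_content: str) -> str:
    """Wrap pre-approved content in a fixed template with clear delimiters."""
    content = approved_content.strip()
    if not content:
        raise ValueError("approved content must not be empty")
    # Strip tag look-alikes so pasted text cannot close the delimiter early.
    content = content.replace("<approved>", "").replace("</approved>", "")
    return PROMPT_TEMPLATE.format(content=content)
```

Keeping the instructions fixed and the user-supplied content clearly delimited makes prompts easier to audit and harder to tamper with.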

6. Monitor and Audit GPT-5 Interactions

To ensure GPT-5 is being used safely and effectively, organizations must continuously monitor and audit AI interactions. Regular monitoring allows teams to detect unusual behavior, prevent misuse, and ensure that AI outputs comply with GPT-5 security policies.

A practical approach involves logging AI queries and responses, reviewing outputs for accuracy, and checking for any sensitive information that may have been inadvertently included.

For instance, an HR department using GPT-5 to draft onboarding emails might review recent AI-generated messages weekly to ensure that no confidential employee data was exposed or misrepresented.

By methodically auditing GPT-5 interactions, businesses can maintain compliance with guidelines and gain important insights into how AI is used in practice. This ongoing review process improves prompt quality, boosts productivity, and reduces operational risks, making AI a safe and reliable tool in everyday business tasks.
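A logging step like the one described above might look roughly like this in Python. The `audit_record` function, the field names, and the `SENSITIVE_MARKERS` list are hypothetical. Whether to store full prompt and response text, and for how long, is a policy decision that depends on your own retention and privacy rules.

```python
import json
from datetime import datetime, timezone

# Hypothetical keywords that should trigger a manual review of the output.
SENSITIVE_MARKERS = ("salary", "ssn", "date of birth")


def audit_record(user_id: str, prompt: str, response: str) -> str:
    """Build one JSON log line for a single GPT-5 interaction."""
    lowered = response.lower()
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt": prompt,
        "response": response,
        # Flag responses that mention any marker so reviewers can prioritize them.
        "needs_review": any(marker in lowered for marker in SENSITIVE_MARKERS),
    }
    return json.dumps(record)
```

Writing one JSON object per line keeps the log easy to filter, for example to pull only the entries where `needs_review` is true for the weekly review.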

7. Train Employees on GPT-5 Security Protocols

Employee training is a critical component of maintaining secure and effective use of GPT-5 in the workplace. Even the most robust security policies are ineffective if employees are unaware of safe AI practices. Training ensures that teams understand potential risks, comply with security protocols, and use AI tools responsibly.

Key training focus areas include:

- Enterprise GPT-5 security policies: Access control, data privacy, and safe usage.

- Identifying risks: Prompt injection and unsafe inputs.

- Safe prompt engineering: Creating accurate and secure prompts.

- Data handling: Knowing what information can be shared with GPT-5.

In short, with proper training, employees can use GPT-5 confidently while minimizing security risks.

8. Integrate AI Governance and Compliance Standards

Integrating AI governance and compliance standards ensures that GPT-5 is used responsibly across the organization. By putting explicit policies and oversight in place, businesses can align their use of AI with internal procedures and legal requirements.

This includes defining accountability for AI outputs, setting review processes, and regularly updating standards as regulations evolve. For companies looking to implement AI solutions effectively and align them with best practices, using AI and ML services can provide expert guidance and strategic support.

With proper governance, organizations can maximize the benefits of GPT-5 while keeping operations secure, compliant, and transparent.

9. Implement External Auditing and Red Teaming for GPT-5

Regular external auditing and red teaming help organizations identify vulnerabilities in how GPT-5 is used. Audits provide an independent review of security, data privacy, and compliance, while red teaming simulates potential attacks or misuse to uncover weaknesses before they cause harm.

By integrating these strategies into their security programs, businesses can strengthen AI safety, support dependable performance, and proactively address risks. This continuous evaluation helps maintain trust and accountability in AI-driven operations.

10. Reduce Hallucination Risks and Ensure Safe Completions

Even advanced AI like GPT-5 can occasionally generate incorrect or misleading information, known as “hallucinations.” In the workplace, these errors can affect decision-making, content quality, and data integrity. Reducing hallucination risks and ensuring safe completions is therefore essential for maintaining reliability and trust in AI outputs.

To minimize risks, teams can follow these tips:

- Provide clear, specific prompts: The more precise the input, the less chance of errors.

- Cross-check AI outputs: Always verify information against trusted sources before use.

- Use guardrails and safety features: Leverage built-in OpenAI GPT-5 safety features to filter unsafe or inaccurate content.

- Iterative review: Review drafts or generated content multiple times to catch inconsistencies.

- Limit critical tasks: Avoid relying solely on AI for high-stakes decisions without human oversight.

By putting these strategies into practice, organizations can use GPT-5 to boost productivity, improve content quality, and protect data privacy while keeping AI outputs accurate and dependable.
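The cross-checking and human oversight tips above can be enforced with a simple release gate. This is a minimal sketch, assuming a hypothetical `Draft` record and a hypothetical list of high-stakes topics; your own categories and approval workflow will differ.

```python
from dataclasses import dataclass

# Hypothetical topics that always require human sign-off.
HIGH_STAKES_TOPICS = {"legal", "financial", "medical", "hr"}


@dataclass
class Draft:
    topic: str
    text: str
    sources_verified: bool = False
    human_approved: bool = False


def ready_to_publish(draft: Draft) -> bool:
    """Require verified sources for every draft, plus human approval for high-stakes topics."""
    if not draft.sources_verified:
        return False
    if draft.topic in HIGH_STAKES_TOPICS:
        return draft.human_approved
    return True
```

With this gate, a marketing draft passes once its sources are verified, while a legal draft stays blocked until a person has also approved it.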

Frequently Asked Questions (FAQs)

2. How Do Organizations Reduce the Risk of GPT-5 Hallucinations in Outputs?

To minimize hallucinations, provide clear and specific prompts, cross-check AI outputs against trusted sources, use GPT-5 safety features, review content iteratively, and avoid relying solely on AI for high-stakes decisions.

3. Can Expert Support Help in Implementing GPT-5 Securely at Work?

Yes, using AI Consulting or AI and ML services can guide businesses in implementing GPT-5 solutions safely, integrating them into workflows, and aligning AI use with compliance standards, ensuring maximum productivity and risk reduction.

Conclusion: Sustainable Practices for Secure GPT-5 Deployment in 2026

Implementing GPT-5 securely in the workplace doesn’t have to be overwhelming. Organizations can reduce risks like data leaks, prompt injection, and AI hallucinations by adhering to structured security policies, managing access, and providing employees with adequate training.

However, how can businesses guarantee that these procedures are followed uniformly by all teams?

Combining strong internal protocols with expert guidance can help implement GPT-5 solutions safely, integrate them seamlessly into workflows, and align AI use with compliance standards.

For example, AI Consulting services provide the support needed to maintain productivity and trust in AI-driven operations. Businesses can make GPT-5 a dependable productivity partner by implementing these sustainable practices and utilizing expert assistance.

As a result, GPT-5 can improve collaboration, decision-making, and content production while the organization keeps control over security and compliance.
