Machine Learning (ML) has been the quiet workhorse of AI for years, handling structured tasks like classification and regression with ease. Models such as decision trees and support vector machines rely on labeled data and explicit feature engineering.

These properties make them efficient for well-defined tasks across industries, from fraud detection in finance to predictive analytics in healthcare. Large Language Models (LLMs), on the other hand, take things one step further.

These models are designed to understand and generate human-like text, processing massive amounts of unstructured data to perform tasks like text completion, summarization, and translation.

The “State of AI in 2024” study by McKinsey shows that 65% of surveyed organizations regularly use generative AI in at least one business function, and adoption is accelerating across IT, marketing, sales, and service teams.

That said, traditional ML isn’t going anywhere. For structured data tasks, it’s still more efficient and less resource-heavy than LLMs.

So, when should a business stick with classic ML, and when is it worth adopting an LLM?

Comparing traditional machine learning vs. large language models helps answer this question.

In this blog, we will examine the differences between Large Language Models and traditional Machine Learning, their applications, and when to use each approach.


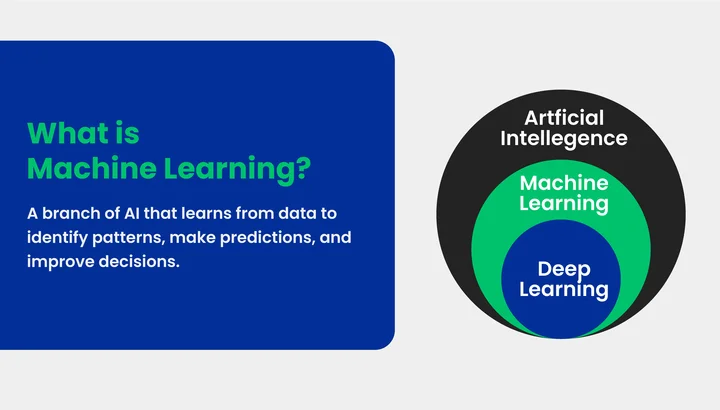

What is Machine Learning?

Traditional machine learning, often referred to as ML, is a branch of artificial intelligence that enables systems to learn from data and improve performance without explicit programming. It uses algorithms to identify patterns, make predictions, and assist in decision-making.

Notable aspects of traditional ML include:

- Algorithms and Models: Decision trees, support vector machines, linear regression, and more.

- Data Dependency: Requires structured data and clearly defined features for optimal results.

- Applications Across Industries: Used in areas like predicting customer behavior, detecting financial anomalies, and optimizing business operations.

Traditional ML also serves as the baseline for evaluating large language models: knowing how classic models work makes it easier to see where LLMs differ and where they handle complex, unstructured data better.
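
To make the idea of structured data and clearly defined features concrete, here is a minimal sketch of a one-split decision tree (a "decision stump") written in plain Python. The transactions, the feature names, and the `fit_stump` helper are hypothetical and exist only for illustration; a real project would more likely use an established library and far more data.

```python
from dataclasses import dataclass

# Hypothetical, hand-made rows: (amount_usd, hour_of_day, is_fraud).
TRANSACTIONS = [
    (12.0, 14, 0),
    (25.5, 10, 0),
    (40.0, 19, 0),
    (980.0, 3, 1),
    (1500.0, 2, 1),
    (60.0, 12, 0),
    (1200.0, 23, 1),
    (15.0, 9, 0),
]


@dataclass
class Stump:
    feature: int      # index of the feature column to test
    threshold: float  # predict 1 (fraud) when the value exceeds this

    def predict(self, row):
        return 1 if row[self.feature] > self.threshold else 0


def fit_stump(rows):
    """Try every (feature, threshold) pair and keep the most accurate one."""
    n_features = len(rows[0]) - 1  # last column is the label
    best, best_correct = None, -1
    for f in range(n_features):
        for threshold in sorted({r[f] for r in rows}):
            stump = Stump(f, threshold)
            correct = sum(stump.predict(r) == r[-1] for r in rows)
            if correct > best_correct:
                best, best_correct = stump, correct
    return best, best_correct / len(rows)


stump, accuracy = fit_stump(TRANSACTIONS)
print(stump, accuracy)
```

On this toy data the stump learns a single rule on the amount column. The point is that the model only works because someone already decided which columns to record and how to label each row.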

What is a Large Language Model (LLM)?

LLM stands for large language model, an advanced AI system designed to understand, generate, and process human language. These models learn from massive amounts of text data to identify patterns, context, and nuances, making them far more capable than traditional ML models at complex language tasks.

Important characteristics of LLMs include:

- Contextual Understanding: They interpret sentences, paragraphs, and even entire documents with high accuracy.

- Generative Abilities: They can produce coherent text, summaries, responses, and even code.

- Versatility Across Applications: They perform well in translation, chatbots, content creation, and other NLP tasks.

Additionally, comparing LLMs with traditional ML algorithms shows that LLMs excel at processing unstructured data, offering solutions that conventional machine learning models often cannot.
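
The core idea of learning patterns from text and then generating text can be illustrated with a toy bigram model. This is not how an LLM is built (LLMs use large neural networks trained on far more data); it is a deliberately simplified stand-in, and the corpus and function names below are assumptions made for this sketch.

```python
from collections import Counter, defaultdict

# Tiny hypothetical corpus; real LLMs train on vastly more text.
CORPUS = [
    "the model reads the text",
    "the model writes the summary",
    "the model reads the question",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent next word, or None if the word was never followed."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


def generate(follows, start, max_words=4):
    """Greedily extend the text one word at a time."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


follows = train_bigrams(CORPUS)
print(generate(follows, "the"))  # the model reads the
```

The bigram model only looks one word back, which is why its output quickly loops. LLMs differ in that they condition on much longer context, which is what lets them track meaning across sentences and documents.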

Why Do LLMs Stand Apart from Traditional Machine Learning?

LLMs stand out because they can process and generate human language at a scale that traditional ML models cannot match. They learn from massive datasets, allowing them to understand context, intent, and nuances in ways conventional models often struggle with.

Notable features that make LLMs unique include:

- Understanding Context: They interpret meaning across sentences, paragraphs, and documents, rather than just focusing on individual data points.

- Generating Human-like Text: LLMs can create coherent responses, summaries, and content automatically, which is difficult for traditional ML algorithms.

- Handling Unstructured Data: Unlike most machine learning models, LLMs efficiently work with text, speech, and other unstructured formats.

- Versatility Across Tasks: They adapt to multiple tasks such as translation, question-answering, chatbots, and content generation without needing task-specific redesign.

Furthermore, the comparison of LLMs vs traditional ML highlights that LLMs offer more flexibility, deeper understanding, and better performance for natural language processing, making them a strong fit for applications where understanding and generating human language is essential.


How are Large Language Models Different from Traditional ML?

Large language models (LLMs) can process vast amounts of unstructured text and understand context, intent, and subtle patterns, while traditional ML models rely on structured data and predefined features to make predictions. As a result, LLMs handle language tasks more flexibly and effectively than traditional ML algorithms.

Unlike traditional ML, which requires task-specific adjustments, LLMs adapt to multiple applications such as chatbots, summarization, and translation without redesigning the model. Their ability to generate human-like text and understand natural language makes LLMs highly effective for NLP compared to traditional ML models.

Now we look at the important factors that differentiate LLMs from traditional ML models across various aspects.

| Factor | LLMs | Traditional ML |
|---|---|---|
| Purpose | To understand, generate, and interact with natural language | To predict outcomes, classify data, or find patterns |
| Data Type | Handles unstructured text, large datasets | Requires structured, well-defined data |
| Flexibility | Adapts to multiple tasks without redesign | Task-specific models are needed for each application |
| Context Understanding | Understands meaning, context, and nuances | Focuses on predefined patterns, limited context |
| Generative Ability | Can produce human-like text and summaries | Cannot generate text, only predicts outputs |
| Applications | NLP, chatbots, translation, and content generation | Classification, regression, clustering with structured data |
| Scalability | Learns from massive datasets efficiently | Limited by dataset size and structure |
| Training Complexity | Requires high computational resources | Lower computational requirements |

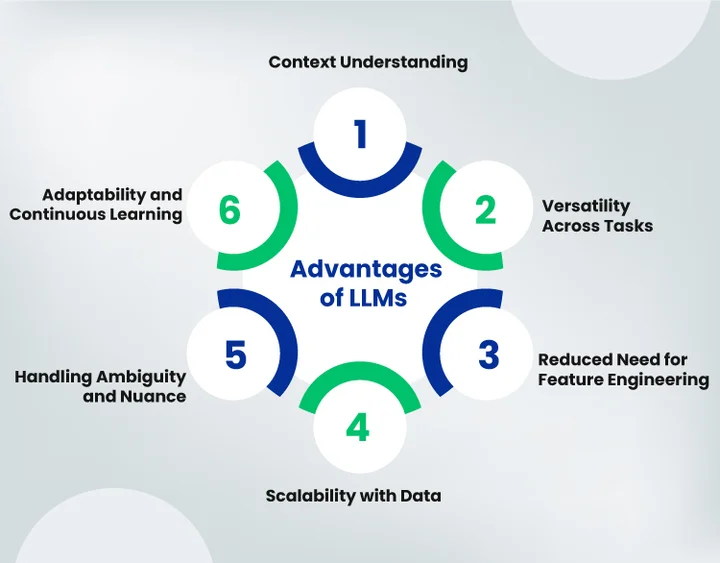

What are the Advantages LLMs Have Over Traditional ML?

Large Language Models (LLMs) provide several advantages over traditional machine learning techniques, especially when it comes to handling unstructured data, understanding context, and performing complex reasoning tasks. These advantages make them a powerful choice for modern AI applications:

- Context Understanding: Unlike traditional ML models that rely on predefined features and structured data, LLMs can interpret the context of text and generate human-like responses. This enables more accurate comprehension, summarization, and conversation.

- Versatility Across Tasks: A single LLM can perform a wide range of tasks, including translation, summarization, question-answering, content generation, and sentiment analysis. Traditional ML models usually require separate models for each task.

- Reduced Need for Feature Engineering: Traditional ML relies heavily on manual feature selection and preprocessing. LLMs, however, learn patterns and relationships directly from raw data, saving time and reducing human bias in feature design.

- Scalability with Data: LLMs can improve performance significantly when trained on larger datasets. In contrast, traditional ML models often plateau in accuracy as more data is added.

- Handling Ambiguity and Nuance: LLMs are capable of understanding subtleties in language, such as idioms, sarcasm, or context-specific meanings, which traditional ML models often struggle to interpret accurately.

- Adaptability Through Fine-Tuning: LLMs can be fine-tuned for new tasks or domains with relatively little additional data, offering flexibility that traditional ML models usually lack.

In summary, LLMs excel in situations where understanding, generating, and reasoning over complex or unstructured text is essential. Their ability to adapt, scale, and handle nuanced language gives them a clear edge over traditional machine learning approaches.
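
The points above about feature engineering and nuance can be seen in a small sketch. It uses hand-picked keyword counts as features, one common style of manual feature design, and shows where that approach misreads a sentence. The word lists and function names are hypothetical, and real traditional ML pipelines are usually more sophisticated than this.

```python
import re

# Hypothetical hand-picked vocabularies (manual feature engineering).
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "poor", "hate"}


def extract_features(text):
    """Turn raw text into two numeric features: keyword hit counts."""
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "positive_hits": sum(w in POSITIVE for w in words),
        "negative_hits": sum(w in NEGATIVE for w in words),
    }


def classify(text):
    """Label text by comparing the two keyword counts."""
    features = extract_features(text)
    score = features["positive_hits"] - features["negative_hits"]
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify("The service was great"))            # positive
print(classify("The service was not good at all"))  # positive (wrong: negation is ignored)
print(classify("Oh great, another delay"))          # positive (wrong: sarcasm is missed)
```

The last two results are the interesting ones: the features never see "not" or the sarcastic tone, so the label is wrong. Fixing that by hand means adding more rules and features, which is the effort that models with contextual understanding aim to reduce.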

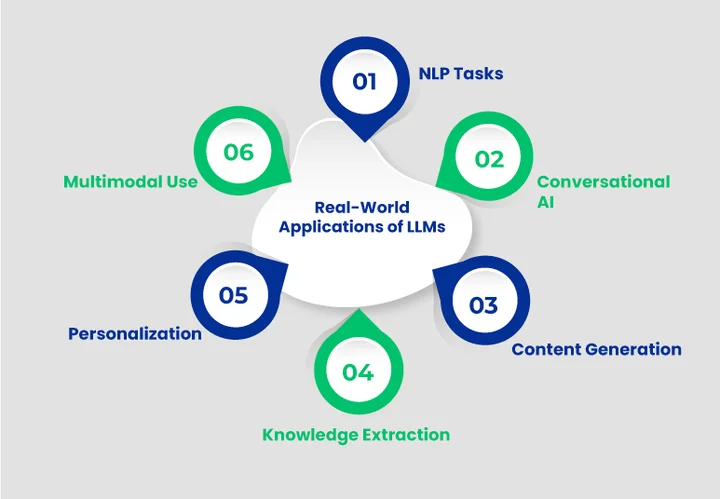

How are LLMs and Traditional Machine Learning Applied in the Real World?

Large Language Models (LLMs) are revolutionizing the way businesses and individuals interact with data, text, and information. Their ability to understand context, generate human-like language, and perform complex reasoning makes them ideal for a wide range of applications:

- Natural Language Processing (NLP) Tasks: From summarizing long documents to translating languages and analyzing sentiment, LLMs excel at tasks that require understanding the nuances of human language.

- Conversational AI and Chatbots: Virtual assistants and customer support bots powered by LLMs can carry on natural conversations, answer complex questions, and provide personalized guidance.

- Content Generation: LLMs can draft articles, reports, marketing copy, or even code, significantly reducing manual effort while maintaining quality and coherence.

- Knowledge Extraction and Research: By analyzing vast amounts of text, LLMs can extract insights, answer queries, and assist in research or data-driven decision-making.

- Personalization and Recommendations: Understanding user intent allows LLMs to deliver smarter content suggestions, product recommendations, and tailored communication.

- Multimodal Applications: When integrated with other data types like images or audio, LLMs support advanced reasoning and creative problem-solving beyond text-based tasks.

In short, LLMs are reshaping industries by making information more accessible, interactions more intelligent, and workflows more efficient. Their versatility and adaptability make them a cornerstone of modern AI solutions.

What are the Future Trends for LLMs?

Looking ahead, LLMs are becoming smaller, faster, and more efficient, while still maintaining their ability to understand and generate human-like text. Already, about 67% of organizations globally are using generative AI tools powered by LLMs in their operations.

Additionally, multimodal models that combine text with images, audio, or video are opening new possibilities across industries. Moreover, businesses are fine-tuning models for specific tasks, achieving better results with less data and lower computational costs.

At the same time, there is a growing focus on ethical AI, including bias mitigation and explainability, to ensure models are safe and reliable.

In short, these trends indicate that LLMs will continue to evolve, becoming more integral to industries and everyday applications.

Frequently Asked Questions (FAQs)

2. How Do LLMs Work Differently from Traditional ML Models?

LLMs are built with a broader scope in mind compared to traditional ML approaches.

- Traditional ML models: They are usually task-specific, meaning a separate model is trained for fraud detection, churn prediction, or recommendation.

- Large Language Models (LLMs): These are general-purpose and can adapt to many language-related tasks (such as translation, summarization, or chatbots) without retraining from scratch.

This makes LLMs more flexible and reduces the effort of developing multiple models for different use cases.
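
As a rough sketch of what "general-purpose" means in practice, the snippet below sends different instructions to the same model instead of training one model per task. The prompt wording, `run_task`, and `fake_llm` are all hypothetical; `fake_llm` is a stand-in that only echoes the instruction, so that the example runs without any real model or API.

```python
from typing import Callable

# Hypothetical prompt templates: one model, several tasks.
PROMPT_TEMPLATES = {
    "translate": "Translate the following text to French:\n{text}",
    "summarize": "Summarize the following text in one sentence:\n{text}",
    "chat": "Reply helpfully to this customer message:\n{text}",
}


def build_prompt(task: str, text: str) -> str:
    """Fill the template for the requested task."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"unsupported task: {task}")
    return PROMPT_TEMPLATES[task].format(text=text)


def run_task(llm: Callable[[str], str], task: str, text: str) -> str:
    """Send the task-specific prompt to whichever model callable is supplied."""
    return llm(build_prompt(task, text))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: reports the instruction line it received."""
    return f"[model output for: {prompt.splitlines()[0]}]"


for task in ("translate", "summarize", "chat"):
    print(run_task(fake_llm, task, "Our store opens at nine."))
```

Swapping `fake_llm` for a call to an actual model is the only change needed to add a real backend; the task list grows by adding a template rather than by training a new model.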

3. In What Applications Are LLMs More Effective Than Traditional ML?

LLMs are especially effective in natural language processing tasks such as chatbots, summarization, translation, and sentiment analysis. On the other hand, traditional ML performs better in structured tasks like predictions or fraud detection.

4. Can LLMs Replace Traditional Machine Learning Models?

Not entirely. While LLMs bring advanced capabilities for language-heavy use cases, traditional ML is still more efficient for structured data tasks such as forecasting and anomaly detection. Therefore, both approaches are often used together to achieve better results.

Conclusion

Large Language Models and traditional Machine Learning each have their strengths, and the choice between them depends on the type of data and task at hand. Traditional ML remains highly effective for structured data, providing efficient, resource-friendly solutions for classification, regression, and predictive analytics.

LLMs, on the other hand, excel at handling unstructured data, understanding context, and generating human-like text, making them ideal for NLP tasks, content generation, and complex reasoning applications.

As AI adoption grows across industries, businesses can benefit from combining both approaches. Organizations can increase productivity, accuracy, and scalability by utilizing LLMs for language-intensive tasks and traditional machine learning (ML) for structured analytics.
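
One way to picture this combined setup is a simple routing rule that sends each task to the approach this article recommends for it. The `Task` fields and the `choose_approach` function below are hypothetical, and the rule is a simplification of the guidance above rather than a complete decision framework.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    data_is_structured: bool     # e.g. tables of numeric or categorical columns
    needs_text_generation: bool  # e.g. summaries, replies, translations


def choose_approach(task: Task) -> str:
    """Apply the article's rule of thumb; real decisions also weigh cost and risk."""
    if task.needs_text_generation:
        return "llm"
    if task.data_is_structured:
        return "traditional_ml"
    return "llm"  # unstructured input without generation, e.g. sentiment analysis


tasks = [
    Task("fraud detection", data_is_structured=True, needs_text_generation=False),
    Task("support chatbot", data_is_structured=False, needs_text_generation=True),
    Task("sentiment analysis", data_is_structured=False, needs_text_generation=False),
]
for task in tasks:
    print(f"{task.name}: {choose_approach(task)}")
```

A rule like this is only a starting point. Budget, latency, and explainability requirements can all justify a different choice for a specific project.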

Understanding the differences, applications, and limitations of each approach ensures smarter AI strategies and positions businesses to harness the full potential of modern artificial intelligence.

