Developers everywhere are asking the same question: “Should I use LlamaIndex or a vector database?”

If you’ve been wondering the same thing, you’re in the right place. This post breaks it down in plain language.

Managing massive amounts of unstructured data isn’t easy. Getting retrieval-augmented generation (RAG) right or handling embedding storage efficiently can significantly impact your app’s performance. At the same time, vector databases are gaining popularity rapidly.

The broader field is drawing heavy investment, too: the 2025 Stanford AI Index Report found that private investment in generative AI reached $33.9 billion in 2024, an 18.7% increase over 2023 and more than 8.5 times the 2022 level. That growth raises the stakes for developers choosing the tools that will keep their AI systems scalable and responsive.

With that in mind, understanding how the LlamaIndex framework differs from a vector database is crucial.

Curious which one can lower vector search latency, improve performance benchmarks, or make integrating with large language models easier?

In this post, we’ll demystify both technologies, compare their strengths, and show you how to combine them to power high-performance AI applications in 2026 and beyond.

Continue reading to find out more about this!


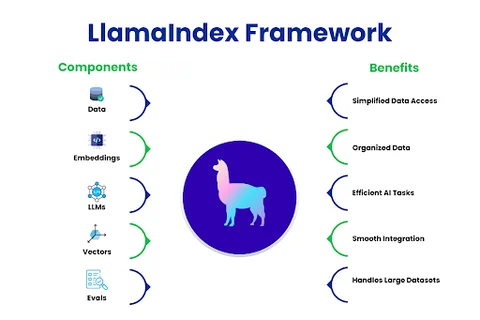

What Is the LlamaIndex Framework, and How Does It Help Developers?

AI projects often overwhelm developers with unstructured data, and connecting that data to language models adds another layer of complexity. That’s where LlamaIndex comes in: it is an open-source data framework that helps developers ingest, organize, and query their data for LLM applications.

It acts as a bridge between raw data, vector databases, and large language models. LlamaIndex loads information from documents, APIs, or other sources, splits it into chunks (which it calls nodes), generates embeddings, and stores them in a vector store for querying. Because it handles this plumbing, developers can focus on building the application itself.

So, how does it help developers in real-world projects? Let’s see why it makes working with AI data much easier.

- Simplifies data access across multiple sources through ready-made data connectors, reducing manual work

- Keeps data organized in indexes for easier querying and updates

- Generates embeddings for your data with the embedding model you configure

- Integrates with many vector databases, so you can pick the storage backend that fits your scale

- Handles larger datasets by delegating storage and search to that backend instead of keeping everything in application memory
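To make the ingestion idea concrete, here is a minimal standard-library sketch of one step LlamaIndex automates: splitting a document into overlapping chunks that carry source metadata. LlamaIndex does this with its own node parsers; the `Node` class, the `chunk_document` function, and the `chunk_size`/`overlap` word counts below are hypothetical illustrations, not its API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A chunk of text plus metadata about its origin (hypothetical type, not LlamaIndex's)."""
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(text: str, source: str, chunk_size: int = 8, overlap: int = 2) -> list[Node]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    nodes = []
    # Stop before a window would contain only words already covered by the previous overlap.
    for index, start in enumerate(range(0, max(len(words) - overlap, 1), step)):
        window = words[start:start + chunk_size]
        nodes.append(Node(" ".join(window), {"source": source, "chunk": index}))
    return nodes


doc = ("LlamaIndex loads documents, splits them into nodes, and embeds each node "
       "so a vector store can search them later.")
nodes = chunk_document(doc, source="guide.txt")
for node in nodes:
    print(node.metadata, node.text)
```

Overlap keeps text that straddles a chunk boundary partly visible in both chunks, at the cost of storing a few words twice.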

Struggling to manage unstructured data in your AI models? We design and deploy modern data pipelines (LlamaIndex + vector DB) so your models deliver faster, more accurate results.

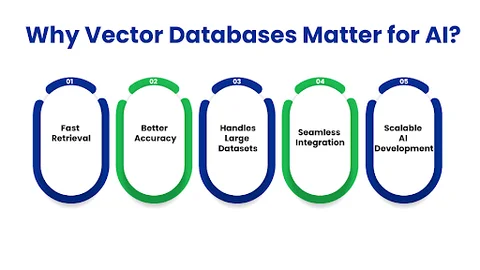

Why Are Vector Databases Important for AI Applications?

Developers often struggle to find relevant information quickly when working with AI projects. A vector database is designed to store numerical representations of data, called “embeddings,” which capture the meaning of text, images, or other types of content.

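Instead of matching exact keywords, the database returns the items whose embeddings are closest to the query’s embedding (measured with a metric such as cosine similarity), so results match on meaning rather than on exact wording.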
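The similarity search described above can be sketched in a few lines. This is a brute-force version built on simple assumptions: hand-written 3-dimensional vectors stand in for real embeddings, and every stored vector is scored against the query. Production vector databases avoid that full scan with approximate indexes, but the ranking idea is the same.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact (brute-force) nearest-neighbor search: score every stored vector, keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional "embeddings"; real embedding models output hundreds of dimensions.
store = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "return an item": [0.7, 0.3, 0.1],
}
query = [0.85, 0.15, 0.05]  # pretend embedding of "How do I get my money back?"
for doc_id, score in top_k(query, store):
    print(f"{doc_id}: {score:.3f}")
```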

Here’s how vector databases help in practice:

- Fast data retrieval: finds relevant documents, text, or images without comparing the query against every stored item, by using approximate nearest-neighbor (ANN) indexes

- Smooth integration: works well with frameworks like LlamaIndex, which bridge raw data and large language models

- Improved query relevance: retrieves information based on meaning, not just keywords

- Handles large datasets: designed to search millions of vectors with low latency

- Persistent embedding storage: keeps embeddings and their metadata so they don’t have to be recomputed for every query

With vector databases, developers can focus on building smarter AI applications while keeping performance high and queries precise.
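The “fast retrieval” point usually rests on approximate nearest-neighbor (ANN) indexes. As a rough illustration only, the sketch below implements a heavily simplified inverted-file (IVF) style index: vectors are grouped by their nearest centroid, and a query scans only the `n_probe` closest groups. The hand-picked centroids and the `ToyIVFIndex` class are assumptions made for the example. Real systems learn centroids (for example with k-means) or use other index types such as HNSW.

```python
def squared_distance(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


class ToyIVFIndex:
    """Simplified inverted-file (IVF) index: each vector lives in the bucket of its nearest centroid."""

    def __init__(self, centroids: list[list[float]]) -> None:
        self.centroids = centroids
        self.buckets: dict[int, list[tuple[str, list[float]]]] = {i: [] for i in range(len(centroids))}

    def _closest_buckets(self, vector: list[float], n: int) -> list[int]:
        ranked = sorted(range(len(self.centroids)),
                        key=lambda i: squared_distance(vector, self.centroids[i]))
        return ranked[:n]

    def add(self, item_id: str, vector: list[float]) -> None:
        self.buckets[self._closest_buckets(vector, 1)[0]].append((item_id, vector))

    def search(self, query: list[float], k: int = 1, n_probe: int = 1) -> list[str]:
        """Scan only the n_probe closest buckets instead of every stored vector."""
        candidates = [item for b in self._closest_buckets(query, n_probe) for item in self.buckets[b]]
        candidates.sort(key=lambda item: squared_distance(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]]


index = ToyIVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])  # hand-picked, not learned
for item_id, vector in {"a": [0.5, 0.2], "b": [1.0, 0.8], "c": [9.5, 10.2], "d": [10.4, 9.9]}.items():
    index.add(item_id, vector)
print(index.search([9.0, 9.0], k=2))  # scans only the bucket around [10, 10]; prints ['c', 'd']
```

The trade-off explains why these indexes are called approximate. If the true nearest neighbor sits in a bucket that is not probed, the search misses it, so raising `n_probe` buys accuracy at the cost of extra scanning.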

Next, we will compare LlamaIndex and vector databases side by side, showing how each manages and connects data for AI projects.

How Does LlamaIndex Differ from Traditional Vector Databases?

Developers often ask if they should rely on LlamaIndex, a framework for connecting large language models (LLMs) to data, or a vector database, which stores embeddings for fast retrieval.

Understanding their differences makes AI development easier and helps you select the most suitable tool for a specific task.

To see how developers can tell these two tools apart and use each effectively, let’s look at a side-by-side comparison:

| Aspect | LlamaIndex | Vector Database |
|---|---|---|
| Purpose | Organizes and connects raw data for LLM queries | Stores high-dimensional vectors for similarity search |
| Data Handling | Ingests documents, APIs, and databases | Optimized for embeddings and numerical vectors |
| Query Type | Designed for retrieval-augmented generation (RAG) and LLM queries | Focuses on semantic search and nearest-neighbor queries |
| Integration | Bridges multiple data sources to LLMs | Connects easily with frameworks like LlamaIndex |
| Scalability | Depends largely on the vector store and LLM it is paired with | Optimized for large-scale vector searches |

In practice, LlamaIndex makes it easier to ingest and structure raw data, while a vector database handles fast, accurate retrieval. For example, in a question-answering system, LlamaIndex loads and chunks the documents, and the vector database quickly finds the chunks most relevant to each question.

Using both together can make AI development workflows much smoother. LlamaIndex structures raw data and feeds the relevant pieces to the large language model, while the vector database keeps retrieval fast. This combination lets developers build scalable, responsive AI applications without turning retrieval into a bottleneck.

Now that we’ve seen how LlamaIndex and vector databases differ, it becomes much easier to explore how they can work together in real-world AI projects.

Which Platform Fits Your Project Needs Better: LlamaIndex or a Vector Database?

Developers often face a common question: should they rely on LlamaIndex to connect their LLMs to data or use a vector database for fast embedding retrieval? The right choice depends on your project needs.

Significant Factors to Consider:

| Factor | LlamaIndex | Vector Database |
|---|---|---|
| Data Complexity | Handles unstructured/mixed data | Optimized for large-scale embeddings |
| Application Type | Knowledge retrieval, Q&A, LLM apps | Recommendations, search, RAG |
| Performance Needs | Some overhead due to LLM coordination | High-speed queries, scalable |

Using both together is often the most effective solution. LlamaIndex structures the data and provides context for the LLM, while the vector database ensures fast retrieval of relevant embeddings.

Example in Practice:

Imagine building a company’s knowledge base. LlamaIndex structures your documents so the LLM can interpret them meaningfully, while the vector database quickly retrieves the most relevant sections for any user query.
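Much of what “structuring” buys you in a knowledge base is metadata. Each chunk keeps fields such as its title and owning department, so retrieval can be filtered before it is ranked. The sketch below uses hypothetical `Chunk` records. To stay runnable without an embedding model, it substitutes a simple word-overlap score (Jaccard similarity) where a real system would compare embeddings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Chunk:
    title: str
    department: str  # metadata attached during ingestion
    text: str


def tokens(text: str) -> set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap score, standing in here for embedding similarity."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve(question: str, chunks: list[Chunk], department: Optional[str] = None, k: int = 1) -> list[Chunk]:
    """Apply the metadata filter first, then rank the remaining chunks by similarity."""
    pool = [c for c in chunks if department is None or c.department == department]
    q = tokens(question)
    return sorted(pool, key=lambda c: jaccard(q, tokens(c.text)), reverse=True)[:k]


knowledge_base = [
    Chunk("Vacation policy", "HR", "Employees accrue vacation days every month."),
    Chunk("VPN setup", "IT", "Install the VPN client before working remotely."),
    Chunk("Remote work policy", "HR", "Employees may work remotely two days a week."),
]

for chunk in retrieve("Can employees work remotely?", knowledge_base, department="HR"):
    print(chunk.title)
```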

How Do You Integrate LlamaIndex with Vector Databases?

For projects that need both intelligent LLM reasoning and fast retrieval, integrating LlamaIndex with a vector database is straightforward:

1. Store embeddings in the vector database: Split documents into chunks, convert each chunk into an embedding, and store the embeddings together with the chunk text in the database.

2. Connect LlamaIndex to the database: Configure LlamaIndex to query the vector database whenever the LLM needs relevant context.

3. Retrieve and process data: When a user asks a question, LlamaIndex embeds the question, fetches the chunks whose embeddings are most similar from the vector database, and passes their text to the LLM, which reasons over it to generate an answer.

4. Optional: Add caching or batching: For high-volume applications, caching frequent queries or batching requests can reduce latency.
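The four steps above can be sketched end to end with the standard library. Everything here is a stand-in chosen so the example runs offline: `embed` is a hypothetical hashed bag-of-words function rather than a real embedding model, `InMemoryVectorStore` plays the role of the vector database, and `build_prompt` stops at the prompt instead of calling an LLM. In LlamaIndex these roles are filled by the configured embedding model, a vector store integration, and a query engine. This code does not use LlamaIndex’s API.

```python
import hashlib
import math
from functools import lru_cache

DIM = 1024  # toy embedding size; an assumption, not a recommended value


@lru_cache(maxsize=1024)
def embed(text: str) -> tuple[float, ...]:
    """Hypothetical stand-in for an embedding model: a hashed bag of words, L2-normalized.
    lru_cache means a repeated string is embedded only once (step 4's caching)."""
    vector = [0.0] * DIM
    for word in text.lower().split():
        token = word.strip(".,?!")
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vector[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vector)) or 1.0
    return tuple(x / norm for x in vector)


class InMemoryVectorStore:
    """Step 1: store each chunk's embedding together with the chunk text."""

    def __init__(self) -> None:
        self.rows: list[tuple[tuple[float, ...], str]] = []

    def add(self, text: str) -> None:
        self.rows.append((embed(text), text))

    def query(self, vector: tuple[float, ...], k: int = 2) -> list[str]:
        # Embeddings are unit length, so the dot product equals cosine similarity.
        ranked = sorted(self.rows, key=lambda row: sum(a * b for a, b in zip(vector, row[0])), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Steps 2-3: embed the question, fetch the most similar chunk text, and build the LLM prompt."""
    context = "\n".join(f"- {chunk}" for chunk in store.query(embed(question), k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
for chunk in ["Refunds are issued within 14 days of a return.",
              "Standard shipping takes 3 to 5 business days.",
              "Our office is closed on public holidays."]:
    store.add(chunk)

prompt = build_prompt("How many days until refunds are issued?", store, k=1)
print(prompt)  # this string would be sent to the LLM of your choice
```

The store keeps the chunk text next to each vector because the LLM needs the text, not the embedding, to answer.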

LlamaIndex integrates with popular vector databases such as Pinecone, Weaviate, and Milvus, as well as the FAISS similarity-search library, giving developers flexibility in choosing the most suitable option for their scale and performance needs.
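That flexibility comes from coding against an interface rather than a specific database. LlamaIndex wraps each supported store behind a common vector-store abstraction, and the sketch below shows the same design pattern with Python’s `typing.Protocol`. The `VectorStore` protocol, its two methods, and both backends are hypothetical and much simpler than LlamaIndex’s actual classes.

```python
from typing import Protocol


class VectorStore(Protocol):
    """Hypothetical minimal interface; LlamaIndex's real vector-store classes have a richer API."""

    def add(self, item_id: str, vector: list[float]) -> None: ...

    def query(self, vector: list[float], k: int) -> list[str]: ...


class EuclideanStore:
    """Backend 1: exact search ranked by smallest Euclidean distance."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def add(self, item_id: str, vector: list[float]) -> None:
        self._items[item_id] = vector

    def query(self, vector: list[float], k: int) -> list[str]:
        return sorted(self._items,
                      key=lambda i: sum((a - b) ** 2 for a, b in zip(vector, self._items[i])))[:k]


class DotProductStore:
    """Backend 2: exact search ranked by largest inner product."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def add(self, item_id: str, vector: list[float]) -> None:
        self._items[item_id] = vector

    def query(self, vector: list[float], k: int) -> list[str]:
        return sorted(self._items,
                      key=lambda i: sum(a * b for a, b in zip(vector, self._items[i])),
                      reverse=True)[:k]


def index_and_search(store: VectorStore, data: dict[str, list[float]], query: list[float]) -> list[str]:
    """Application code depends only on the protocol, so the backend can be swapped freely."""
    for item_id, vector in data.items():
        store.add(item_id, vector)
    return store.query(query, k=1)


data = {"cats": [1.0, 0.0], "dogs": [0.0, 1.0]}
for backend in (EuclideanStore(), DotProductStore()):
    print(type(backend).__name__, index_and_search(backend, data, [0.9, 0.1]))
```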

This integration pairs fast retrieval with LLM reasoning, making your application both efficient and capable of answering questions about complex data.

Frequently Asked Questions (FAQ)

1. Can You Use LlamaIndex Without a Vector Database (Or Vice Versa)?

Yes. For small or simple projects with low data volume, LlamaIndex can keep embeddings in its default in-memory vector store instead of an external database. You can also use a vector database on its own for semantic search when no LLM is involved. For most production AI apps, however, combining both gives the best balance of organization and speed.

2. How Do LlamaIndex and Vector Databases Work Together in a Real Application?

Typically, your data is split into chunks, converted into embeddings, and stored in a vector database. When a user query arrives, LlamaIndex embeds the query, retrieves the chunks whose embeddings are most similar, and passes their text to the LLM, which then produces a contextual answer. This pipeline combines fast retrieval with the LLM’s reasoning.

3. What Factors Should Developers Consider When Choosing Between the Two?

Key factors include:

- Data complexity (mixed, unstructured sources vs. pure embedding search)

- Performance needs (query latency, scale)

- Application type (knowledge retrieval, recommendations, RAG apps)

4. Are There Any Best Practices for Integrating LlamaIndex with a Vector Database?

Yes. Some tips are:

- Pre-process and clean your data.

- Store embeddings in a scalable vector database.

- Optimize LlamaIndex queries (batching, caching).

- Monitor latency and memory usage.

Following these steps helps keep retrieval fast and the integration easy to maintain.
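Two of these tips, cleaning data before embedding and monitoring latency, can be sketched with the standard library. The helper names (`clean_text`, `deduplicate`, `timed`) and the in-memory `latencies_ms` dictionary are hypothetical. A production pipeline would more likely send these timings to its existing monitoring system.

```python
import re
import time
import unicodedata
from contextlib import contextmanager


def clean_text(raw: str) -> str:
    """Normalize Unicode, drop control characters (keeping tabs/newlines), then collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = "".join(ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(chunks: list[str]) -> list[str]:
    """Clean each chunk and drop exact (case-insensitive) duplicates so text isn't embedded twice."""
    seen: set[str] = set()
    unique: list[str] = []
    for chunk in chunks:
        cleaned = clean_text(chunk)
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique


latencies_ms: dict[str, list[float]] = {}


@contextmanager
def timed(stage: str):
    """Record how long a pipeline stage (embedding, retrieval, LLM call) takes, in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        latencies_ms.setdefault(stage, []).append((time.perf_counter() - start) * 1000)


raw_chunks = ["  Refund\tpolicy:\x00 14 days. ", "Refund policy: 14 days.", "Shipping takes 3-5 days."]
with timed("preprocess"):
    chunks = deduplicate(raw_chunks)
print(chunks, latencies_ms)
```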

Final Takeaway: Smart AI Data Access with LlamaIndex & Vector Databases in 2026

Managing AI data efficiently can feel overwhelming, especially when dealing with unstructured documents, embeddings, and large-scale vector searches. By using LlamaIndex alongside a vector database, developers can organize and retrieve relevant information without slowing down applications or overcomplicating workflows.

In addition to improving query relevance, this approach helps keep your AI applications scalable and responsive in 2026 and beyond.

If your project involves integrating these tools into practical applications, working with a skilled software development team can help you implement robust, production-ready solutions. Embedding storage and retrieval-augmented generation require proper structuring and development practices to make AI systems smarter, faster, and more reliable.

This approach effectively addresses common pain points faced by developers today.

Frustrated with slow AI responses? Speed them up 10x with advanced LLM retrieval and vector search, keeping your apps lightning-fast, accurate, and scalable.
