You’re late for a meeting, your laptop is ready, and then… Boom, the car keys vanish.

You flip cushions, scan the table, and even peek in the fridge. Minutes wasted, frustration rising. Then you spot them sitting in plain sight on the counter.

That’s exactly how AI feels when it tries to find the right information. Your files, emails, and documents are all there, but they’re scattered like clutter in a messy drawer.

This is where LlamaIndex makes life easier. Think of it as that super-organized friend who shows up, tidies everything into folders, labels them, and says,

“Now you’ll always know where to look.”

And clearly, it’s not just me hyping it up; LlamaIndex reports over 4 million package downloads every month, which tells you how many developers already trust it. In 2026, it’s quickly becoming the go-to way for developers to build smarter AI apps without the chaos of unorganized data.

In this blog, we’ll walk through what LlamaIndex really is, how it works, a simple step-by-step guide to using it, and some best practices.

Alright, enough small talk; let’s jump in and see what LlamaIndex is all about.

What is LlamaIndex?

So, what exactly is LlamaIndex?

Think of it as the librarian your AI has always needed. You walk into a library with millions of books, but if they’re just tossed into piles on the floor, you’d never find what you’re looking for. That’s how most raw data feels to an AI: everything’s technically there, but completely unorganized.

LlamaIndex steps in and acts like the librarian who not only organizes the shelves but also knows exactly where to point you when you ask a question.

Instead of your AI hunting through a mountain of files, LlamaIndex gives it a neat index and a clear map.

The result?

Your AI can actually “remember” where things are and fetch the right answer without wasting time.

Among today’s AI indexing tools, developers are leaning toward LlamaIndex rather than wiring up a vector database or a clunky keyword search by hand. It usually sits on top of a vector store instead of replacing it, and it’s all about helping AI understand the data in a way that feels natural, almost like a conversation.

And this brings us to the next big question:

How does LlamaIndex actually pull this off in real AI applications?

How Does LlamaIndex Work for AI Applications?

Picture walking into that same library, but this time you’re not just browsing; you’ve got a very specific question.

Instead of leaving you to waste hours flipping through random books, the librarian walks straight to the right shelf, pulls out the exact book, and even points to the page that answers your question. That’s how LlamaIndex helps your AI. It’s not just about storing data; it’s about making sure your AI can actually use that data when it matters.

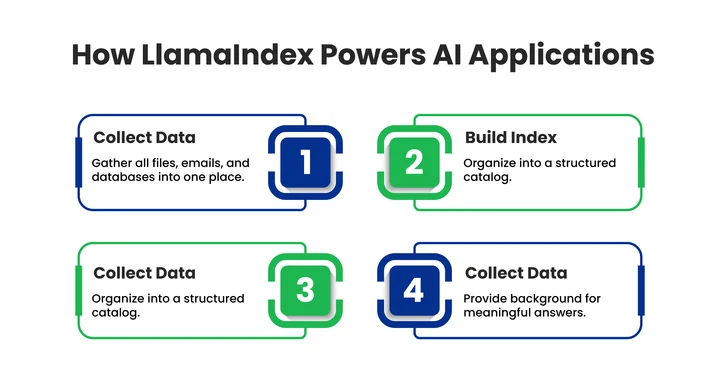

So if we turn that into real steps, it looks something like this:

- Ingesting the data: All your scattered files (PDFs, emails, and databases) get pulled into one place, so nothing slips through the cracks.

- Building the index: Instead of leaving things in messy piles, LlamaIndex creates a structured catalog your AI can navigate with ease.

- Querying with precision: When your AI asks a question, the “librarian” points it straight to the right shelf and page instead of making it search blindly.

- Providing context: LlamaIndex adds the background your AI needs so answers make sense in the bigger picture.
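To make those four steps concrete, here is a toy sketch in plain Python. It is not how LlamaIndex works under the hood (the real library relies on embeddings and a language model); the `ToyIndex` class, its keyword-overlap scoring, and the sample documents are all made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyIndex:
    """A keyword-overlap index: a stand-in for a real embedding-based index."""

    def __init__(self):
        self.entries = []  # list of (source_name, text, token_counts)

    def ingest(self, source_name, text):
        # Steps 1 and 2: pull a document in and catalog its words.
        self.entries.append((source_name, text, Counter(tokenize(text))))

    def query(self, question, top_k=1):
        # Step 3: score each document by how many question words it contains.
        words = tokenize(question)
        scored = [
            (sum(counts[w] for w in words), name, text)
            for name, text, counts in self.entries
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        # Step 4: hand back the matching text itself as context for the answer.
        return [(name, text) for score, name, text in scored[:top_k] if score > 0]


index = ToyIndex()
index.ingest("hr.pdf", "Employees get 20 vacation days per year.")
index.ingest("it.eml", "Reset your laptop password from the IT portal.")

matches = index.query("How many vacation days do employees get?")
print(matches)
```

The question shares words with the HR document and none with the IT email, so only the HR text comes back. A real index swaps the word counting for embeddings, but the ingest, catalog, look up, and return-context shape stays the same.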

That’s why apps built with LlamaIndex give answers that feel sharp, relevant, and human-like.

So now that you know what’s happening behind the scenes, let’s roll up our sleeves and walk through how you can use LlamaIndex step by step.

How Can Developers Use LlamaIndex For Smarter AI Apps?

So now that we’ve talked about what LlamaIndex does, the next question is:

How do you actually use it?

The cool part is, it’s way simpler than it looks. Think of it like unboxing a new kitchen gadget you’ve been eyeing for weeks. At first, all the buttons, parts, and instructions feel a little overwhelming, but once you try it step by step, it suddenly makes sense. And before long, you’re using it every day: in the kitchen, to get dinner on the table quicker, and in this case, to build smarter AI apps without the headache.

Let’s break it down into simple steps so you can see how it all comes together:

1. Install And Set It Up

This is like taking your gadget out of the box and plugging it in. With LlamaIndex, that just means installing the package:

pip install llama-index

That’s it; your appliance is ready to go.

2. Connect Your Data Sources

A kitchen gadget is useless without ingredients. Same deal here. You’ll need to feed LlamaIndex your data, whether that’s PDFs, Google Drive docs, Notion notes, or even full databases. Once it has the “ingredients,” it can actually do something useful.
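For local files, one way to control which “ingredients” go in is to collect the paths yourself before loading anything. This is only a sketch: the `collect_files` helper and the extension list are hypothetical, not part of LlamaIndex. The resulting paths can then be passed to `SimpleDirectoryReader` through its `input_files` argument.

```python
from pathlib import Path

# Assumption: these are the file types you want indexed; adjust to taste.
ALLOWED_EXTENSIONS = {".pdf", ".md", ".txt"}


def collect_files(root, allowed=ALLOWED_EXTENSIONS):
    """Return sorted paths under `root` whose extension is in `allowed`."""
    root = Path(root)
    return sorted(
        str(path)
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in allowed
    )


# Usage (requires llama-index, so it is left commented out here):
# from llama_index.core import SimpleDirectoryReader
# documents = SimpleDirectoryReader(input_files=collect_files("data")).load_data()
```

Filtering up front keeps temp files and stray exports out of the index. Sources like Google Drive or Notion go through their own reader packages instead, which this sketch does not cover.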

3. Build Your Index

This part is like the gadget’s setup mode. Once the data is in, LlamaIndex organizes it into an index so your AI knows exactly where to find things. Without it, your AI would just be pushing random buttons, hoping for the right outcome. With the index, it knows the recipe.
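Building an index takes time (and, with a hosted embedding model, money), so a common pattern is to save it to disk once and reload it on later runs. The sketch below assumes llama-index 0.10 or newer and a configured embedding model (by default that means an OpenAI API key); the function name `load_or_build_index` and both directory names are just illustrative.

```python
import os


def load_or_build_index(data_dir="data", persist_dir="storage"):
    """Reload a saved index from `persist_dir`, or build and save a new one."""
    # Imported here so this file can be imported even before
    # `pip install llama-index` has been run.
    from llama_index.core import (
        SimpleDirectoryReader,
        StorageContext,
        VectorStoreIndex,
        load_index_from_storage,
    )

    if os.path.isdir(persist_dir):
        # A previous run already saved the index; load it instead of rebuilding.
        storage_context = StorageContext.from_defaults(persist_dir=persist_dir)
        return load_index_from_storage(storage_context)

    # First run: read the documents, build the index, and write it to disk.
    documents = SimpleDirectoryReader(data_dir).load_data()
    index = VectorStoreIndex.from_documents(documents)
    index.storage_context.persist(persist_dir=persist_dir)
    return index
```

Think of it as the gadget remembering its settings: the first call does the slow setup, and every call after that starts from the saved state.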

4. Ask Questions (Querying)

Here’s where the magic happens.

Think of it like loading ingredients into a blender and pressing the right setting; you don’t have to guess, and it gives you exactly what you want. In the same way, you ask a question in plain English, and LlamaIndex fetches the right answer straight from your data, without wandering around aimlessly.

Quick Python example:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

response = query_engine.query("What are the key takeaways from the PDF?")

print(response)
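It often helps to see which chunks of your data an answer was built from. The response returned by `query_engine.query(...)` carries a `source_nodes` list, where each entry pairs a retrieved chunk with a similarity score. The `describe_sources` helper below is hypothetical; it only touches those attributes, so you can try it on stand-in objects too.

```python
def describe_sources(response, max_chars=80):
    """Return one summary line per retrieved chunk behind a response."""
    lines = []
    for item in response.source_nodes:
        # Each item pairs a retrieved chunk (`node`) with a similarity `score`.
        file_name = item.node.metadata.get("file_name", "unknown source")
        snippet = item.node.get_content()[:max_chars].replace("\n", " ")
        score = "n/a" if item.score is None else f"{item.score:.2f}"
        lines.append(f"{file_name} (score {score}): {snippet}")
    return lines


# Usage with the example above:
# query_engine = index.as_query_engine(similarity_top_k=3)  # retrieve 3 chunks
# response = query_engine.query("What are the key takeaways from the PDF?")
# for line in describe_sources(response):
#     print(line)
```

If the listed sources look unrelated to the question, that usually points to a retrieval problem rather than a language model problem, which tells you where to start debugging.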

5. Deploy It In Your App

Finally, the fun part: actually using it.

Once you’ve tested a new gadget with a trial recipe, you start making real meals. Same idea here: this is where you plug LlamaIndex into your app. It could be a chatbot, a search assistant, or an internal tool. This is the step where it shifts from “demo mode” to powering something real.
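In app code, the query engine usually ends up behind one small function that your chatbot or API route calls. Here is a minimal sketch of that wrapper. The name `answer_question` and the fallback messages are assumptions, and `query_engine` can be any object with a `.query(text)` method, which is the call used in the Python example earlier.

```python
import logging

logger = logging.getLogger(__name__)


def answer_question(query_engine, question, fallback="Sorry, I couldn't find an answer."):
    """Run one user question through a query engine and return plain text."""
    question = question.strip()
    if not question:
        return "Please enter a question."
    try:
        response = query_engine.query(question)
    except Exception:
        # Assumption: the app prefers a friendly message over a stack trace.
        logger.exception("Query failed for question: %r", question)
        return fallback
    text = str(response).strip()
    return text or fallback
```

Keeping the engine as a parameter means the same function works in a web handler, a chat loop, or a unit test with a fake engine.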

Now that you’ve got a handle on how to get LlamaIndex up and running, let’s check out some tips to make sure it works smoothly and efficiently.

What Practices Make LlamaIndex Work Best in AI Development?

Using LlamaIndex is exciting, but like any powerful tool, a little care goes a long way. Think of it like learning to drive a new car; you wouldn’t speed down the highway on your first day. A few smart habits will help your AI run faster, give more accurate answers, and stay reliable over time.

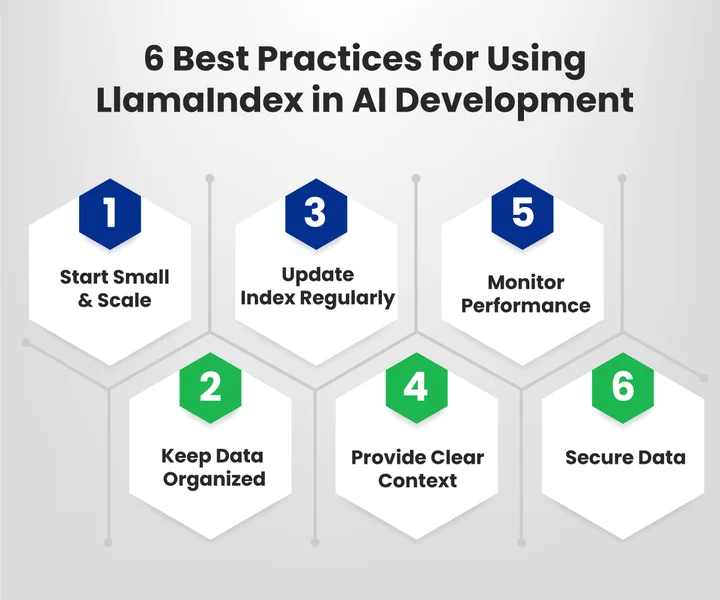

Here are some friendly tips to get the most out of LlamaIndex:

1. Start Small And Scale Gradually

Don’t overwhelm yourself by adding all your data at once. Begin with a small set, test your index, and gradually bring in more. This way, you can catch problems early and make sure everything runs smoothly.
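One lightweight way to “test your index” on a small data set is a handful of questions, each paired with a phrase you expect to see in the answer. The sketch below is an assumption about how you might set that up: `run_smoke_test` is a hypothetical helper, and `ask` is any function that takes a question and returns text, for example `lambda q: str(query_engine.query(q))`.

```python
def run_smoke_test(ask, checks):
    """Ask each question and report which answers miss the expected phrase.

    `ask` is any callable that maps a question string to an answer string.
    `checks` is a list of (question, expected_phrase) pairs.
    """
    failures = []
    for question, expected in checks:
        answer = ask(question)
        if expected.lower() not in answer.lower():
            failures.append((question, answer))
    return failures
```

Run it after every batch of new data. An empty list means the old answers still hold, and anything else shows you exactly which question broke.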

2. Keep Your Data Organized

Messy data slows everything down. Make sure your files, spreadsheets, and documents are clearly labeled and structured before feeding them into LlamaIndex. When your data is tidy, your AI can find answers faster and more accurately.
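As a rough sketch of what “tidy” can mean in practice, the helper below cleans up whitespace and derives a few labels from the file path. `prepare_record` and its metadata keys are hypothetical choices, and collapsing all whitespace is an assumption that suits plain prose better than tables or code.

```python
import re
from pathlib import Path


def prepare_record(path, raw_text):
    """Normalize whitespace and derive simple labels from the file path."""
    path = Path(path)
    # Collapse runs of spaces, tabs, and newlines left over from export tools.
    text = re.sub(r"\s+", " ", raw_text).strip()
    metadata = {
        "title": path.stem.replace("_", " ").replace("-", " ").strip(),
        "folder": path.parent.name,
        "file_type": path.suffix.lower().lstrip("."),
    }
    return {"text": text, "metadata": metadata}


# Usage (requires llama-index):
# from llama_index.core import Document
# record = prepare_record("policies/travel_policy.PDF", raw_text)
# doc = Document(text=record["text"], metadata=record["metadata"])
```

Labels like these travel with each chunk into the index, so you can later tell which folder or file an answer came from.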

3. Update Your Index Regularly

Data changes over time, and your AI needs the latest information. Refresh your index whenever you add or modify data to make sure your AI isn’t relying on outdated info.
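Refreshing does not have to mean rebuilding everything. One possible approach is to keep a content hash for each document from the last indexing run and only touch what changed. The `fingerprint` and `find_stale` helpers below are hypothetical and use only the standard library; applying the changes is then a job for the index’s own update methods (LlamaIndex indexes expose calls such as `insert` and `refresh_ref_docs`, so check the docs for your version).

```python
import hashlib


def fingerprint(text):
    """Return a stable hash of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale(current_docs, known_hashes):
    """Compare current documents against hashes saved at the last indexing run.

    `current_docs` maps a document id to its text; `known_hashes` maps a
    document id to the fingerprint recorded last time.
    Returns (ids to add or re-index, ids to delete), each sorted.
    """
    changed = sorted(
        doc_id
        for doc_id, text in current_docs.items()
        if known_hashes.get(doc_id) != fingerprint(text)
    )
    removed = sorted(set(known_hashes) - set(current_docs))
    return changed, removed
```

New and edited documents land in the first list, deleted ones in the second, and anything untouched is skipped entirely.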

4. Provide Clear Context

If you ask a friend a vague question like, “What’s going on?”, they might not know exactly what you mean. Your AI works the same way. Provide clear context in your queries so that it can deliver relevant, precise answers. Experiment with phrasing until you get consistent results.
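Here is a small sketch of the difference between a vague and a specific query. The `build_query` helper and its three hint parameters are made up for illustration; the only point is that scope, timeframe, and desired format can be spelled out in the question text itself.

```python
def build_query(question, source=None, timeframe=None, answer_format=None):
    """Attach optional scope hints to a question before sending it off."""
    parts = [question.strip()]
    if source:
        parts.append(f"Use only information from {source}.")
    if timeframe:
        parts.append(f"Focus on {timeframe}.")
    if answer_format:
        parts.append(f"Answer as {answer_format}.")
    return " ".join(parts)


vague = build_query("What's going on?")
specific = build_query(
    "What changed in the travel policy?",
    source="the HR handbook",
    timeframe="updates from this year",
    answer_format="a short bulleted list",
)
print(specific)
```

The second query gives the retriever better words to match on and tells the model what a good answer looks like.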

Wrapping Up: LlamaIndex And The Future Of AI Applications

Remember that lost-keys moment we started with?

That’s exactly what LlamaIndex saves you from. Instead of your AI digging through piles of unorganized files, it knows exactly where to look, thanks to smarter AI data indexing that actually makes sense.

For developers, this framework helps you turn messy notes and databases into answers your apps can actually use. From chatbots and assistants to full-scale AI applications, LlamaIndex fits naturally into your workflow and works smoothly with LLM integrations you may already be building on.

And here’s the real win:

As AI keeps evolving, you won’t be stuck chasing trends. With LlamaIndex, your projects stay grounded, reliable, and a step ahead. Pairing it with trusted AI consulting services ensures those ideas move beyond experiments into scalable, real-world impact.

Think of it as the tool that turns “searching endlessly” into “finding instantly,” the kind of upgrade you’ll be glad you made today.

Frequently Asked Questions (FAQs)

2. How Do You Use LlamaIndex For AI Apps?

First, you set it up and connect your data sources, like PDFs or databases. Then it builds an index that your AI can easily search through. Finally, you can query it in natural language to get precise answers.

3. Is LlamaIndex Better Than A Vector Database?

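It isn’t really either/or. A vector database stores and searches embeddings, but on its own it doesn’t load your files or hand context to a language model. LlamaIndex adds that structure on top and can use a vector database as its storage layer. This means your AI doesn’t just retrieve information but can explain it in a meaningful way.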

4. What Are Some Best Practices With LlamaIndex?

Keep your data clean and organized before indexing. Update your index regularly so your AI always works with the latest information. Clear, well-phrased queries also lead to better answers.

5. Can Developers Integrate LlamaIndex Into Real Apps?

Yes, LlamaIndex works smoothly inside chatbots, assistants, and enterprise tools. It helps connect raw data with language models in a natural way. Developers use it to build AI apps that feel sharp and human-like.
