Modern applications rely on smooth communication between multiple services, and as a result, traffic control plays a significant role in overall performance.

At the same time, different tools contribute in unique ways, so the right combination helps systems stay fast, secure, and reliable.

Meanwhile, the API gateway market continues to expand rapidly, with projected growth from USD 4.38B in 2024 to more than USD 20B by 2033. This growth highlights how important these technologies have become for modern development teams.

As teams work through architectural decisions, a familiar challenge appears. Many want strong API-level control along with stable server-side performance. With clearer insight into how API Gateways and Load Balancers operate, this choice becomes far more manageable.

In this blog, we will explore the differences between API Gateways and Load Balancers, how each supports application performance, and when using both makes sense.

So, let’s get started!

What is an API Gateway, and Why Is It Essential for Modern Application Architecture?

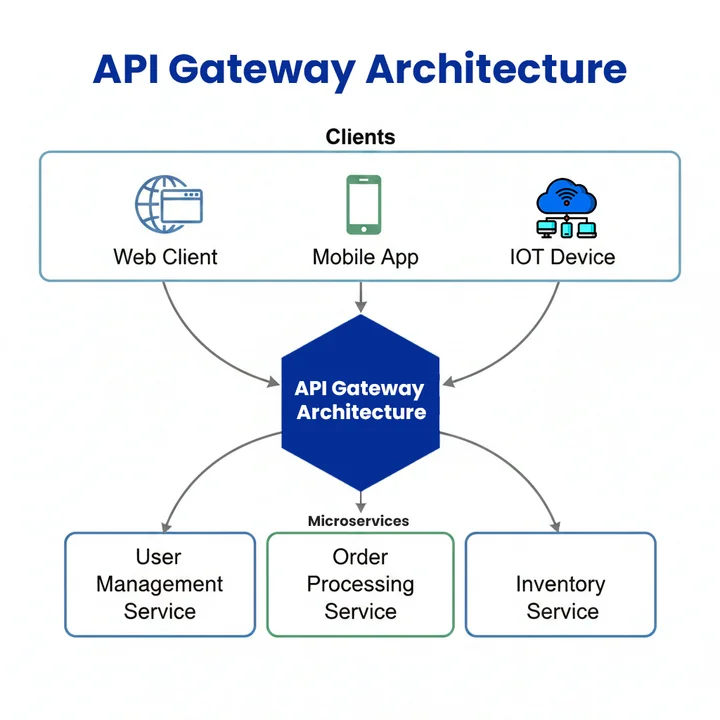

An API Gateway serves as the central entry point for all client requests in an application. It simplifies communication between clients and backend services, making it easier to manage multiple microservices.

When comparing an API Gateway with a Load Balancer in a microservices architecture, the gateway is the component that handles requests at the service level, giving teams fine-grained control over how each API is exposed.

What are the Core Functions and Responsibilities?

The gateway performs several tasks to keep your applications running smoothly:

- Route requests to the correct backend service

- Translate protocols (for example, HTTP to gRPC)

- Aggregate responses from multiple services

- Handle logging, monitoring, and caching

- Reduce service complexity so microservices can focus on their primary tasks

These functions allow backend services to operate without worrying about cross-service communication or extra overhead.
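To make the routing task concrete, here is a minimal sketch of how a gateway might map an incoming path to a backend service. The route table, the service names, and the `resolve_backend` helper are all hypothetical and chosen only for illustration; real gateways such as Kong or AWS API Gateway express this through their own configuration.

```python
from typing import Dict, Optional

# Hypothetical route table: path prefix -> backend service name.
ROUTES: Dict[str, str] = {
    "/products": "catalog-service",
    "/orders": "order-service",
    "/users": "user-service",
}


def resolve_backend(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the backend for the longest matching path prefix, or None."""
    # A prefix matches only on a whole path segment, so "/productsX"
    # does not accidentally match "/products".
    matches = [p for p in routes if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    return routes[max(matches, key=len)]
```

With this table, a request for `/products/42` would be sent to `catalog-service`, while an unknown path returns `None`, which a gateway would typically turn into a 404 response.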

How to Handle Authentication and Rate Limiting in the API Gateway?

Security is a critical responsibility of the API Gateway. It manages who can access services and protects them from being overwhelmed. The security tasks include:

- Enforce authentication using API keys, OAuth tokens, or JWTs

- Protect services from overload with rate limiting

- Keep the system secure while remaining accessible to legitimate users

The system remains stable because security is managed centrally, allowing developers to concentrate on creating features rather than protecting individual endpoints.
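As a rough illustration of centralized security, the sketch below combines an API key check with a token-bucket rate limiter. The key set, the class names, and the limits are assumptions made for this example, not the behavior of any particular gateway product.

```python
import time
from typing import Callable, Dict

# Hypothetical set of valid API keys; a real gateway would look these up
# in a database or identity provider.
VALID_API_KEYS = {"demo-key-123"}


class TokenBucket:
    """Allows short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens for the time that has passed, capped at capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gatekeeper:
    """Checks the API key first, then applies a per-key rate limit."""

    def __init__(self, capacity: int = 5, refill_per_second: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def check(self, api_key: str) -> int:
        """Return an HTTP-style status: 401, 429, or 200."""
        if api_key not in VALID_API_KEYS:
            return 401  # unknown caller
        bucket = self._buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, self.clock)
            self._buckets[api_key] = bucket
        return 200 if bucket.allow() else 429  # 429 = too many requests
```

Because both checks live in one place, individual services behind the gateway do not need their own copies of this logic.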

Role in microservices architecture: In microservices, the API Gateway acts as the first point of contact, centralizing traffic, security, and monitoring. This makes microservices easier to scale, maintain, and keep robust under load.

What Is a Load Balancer, and How Does It Maintain Server Performance and Reliability?

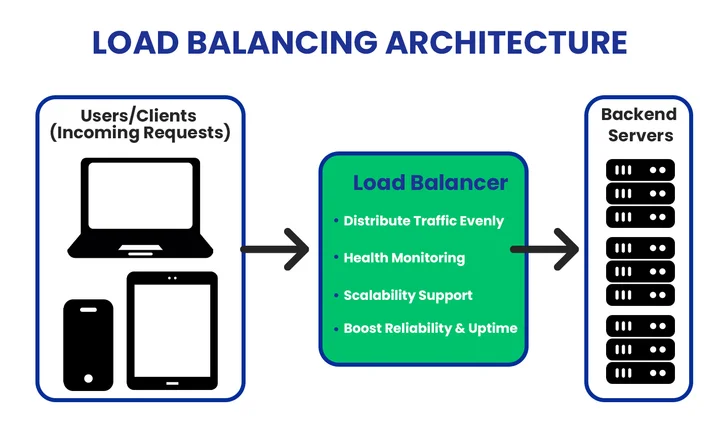

While API Gateways handle API-level control, Load Balancers focus on distributing traffic and maintaining server stability. They are essential for modern applications, especially in the context of Cloud & DevOps services.

A Load Balancer performs several critical tasks to keep systems running smoothly:

- Distribute client requests evenly across servers, keeping applications responsive during traffic spikes

- Detect unhealthy servers and automatically redirect traffic to healthy ones, reducing downtime

- Support scalability by adding or removing servers without disrupting users

- Improve system reliability and uptime, ensuring services remain available

These tasks let backend servers focus on processing requests without being overloaded, maintaining a smooth user experience.
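The first two tasks can be sketched in a few lines. The example below assumes a simple round-robin strategy and a health state that some external checker updates; the class and server names are hypothetical, and production load balancers offer more strategies than this.

```python
from typing import Iterable, List, Set


class RoundRobinBalancer:
    """Cycles through servers in order, skipping any marked unhealthy."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.servers: List[str] = list(servers)
        self.healthy: Set[str] = set(self.servers)
        self._next = 0

    def mark_unhealthy(self, server: str) -> None:
        # Would be called by a health checker after failed probes.
        self.healthy.discard(server)

    def mark_healthy(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def pick(self) -> str:
        # Try each server at most once per call.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

Calling `mark_unhealthy("b")` removes server `b` from rotation without any change on the client side, which is the failover behavior described above.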

Layer 4 vs. Layer 7 Load Balancer:

Load balancers operate at different layers of the network, each with unique capabilities:

Layer 4 (Transport Layer):

- Routes traffic based on IP addresses and ports. Fast and efficient, ideal for high-performance applications.

Layer 7 (Application Layer):

- Routes traffic based on application-level data like HTTP headers or URLs. Provides advanced control for more intelligent routing and content-based decisions.

The choice of layer determines how efficiently traffic is handled, keeping applications fast and reliable.
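The difference comes down to what information each layer can see when it makes a decision. The sketch below contrasts the two under simplified assumptions: the function names, the pool names, and the CRC32 hash are illustrative choices, not how any specific load balancer is implemented.

```python
import zlib
from typing import Dict, List


def pick_layer4(client_ip: str, client_port: int, backends: List[str]) -> str:
    """Layer 4 style: only the connection details are visible, so hash them."""
    key = f"{client_ip}:{client_port}".encode()
    return backends[zlib.crc32(key) % len(backends)]


def choose_pool_layer7(path: str, headers: Dict[str, str]) -> str:
    """Layer 7 style: inspect the HTTP request to choose a server pool."""
    if path.startswith("/api/"):
        return "api"      # hypothetical pool for API traffic
    if headers.get("Accept", "").startswith("image/"):
        return "static"   # hypothetical pool for static assets
    return "web"          # default pool
```

The Layer 4 function never looks at the request itself, which keeps it cheap, while the Layer 7 function has to parse the request first but can make content-based decisions.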

API Gateway vs. Load Balancer Comparison: Differences and Responsibilities

API Gateways and Load Balancers manage incoming requests but play different roles. The gateway controls how APIs behave, while the load balancer spreads traffic across servers to keep systems stable. Both support the application, but each one focuses on a separate layer of the architecture.

The table below summarizes the differences between an API Gateway and a Load Balancer, their respective responsibilities, and where each is best suited.

| Aspect | API Gateway | Load Balancer |
|---|---|---|
| Best For | Microservices, API control, client simplification | Traffic distribution, server uptime, scaling |
| Client Handling | Single entry point for all clients | Distributes traffic only |
| Request Customization | Supports rewriting and versioning | No request modification |
| Response Handling | Can combine responses | Returns server response as-is |
| Policy Rules | API-level limits and controls | General balancing rules |
| Developer Support | Analytics and API tools | No developer tooling |
| Fault Isolation | Manages problematic API routes | Removes unhealthy servers |
| Latency | Can use caching | Depends on backend speed |

Looking at practical scenarios makes it clear how the API gateway manages API behavior, while the load balancer maintains a steady flow of traffic across servers.

What are the Practical Use Cases for API Gateways and Load Balancers?

API gateways and load balancers are essential components in modern application architecture. Each addresses different challenges, yet together they help build systems that are secure, scalable, and reliable.

When to Use API Gateway?

Looking at practical scenarios makes it clear how an API Gateway fits into your architecture. Here are its most common applications:

- Managing Microservices: Acts as a central entry point, simplifying communication between multiple backend services. Tools like AWS API Gateway and Kong are widely used for efficiently routing requests.

- Security Enforcement: Handles authentication, authorization, and rate limiting for individual services. Apigee by Google Cloud is commonly used for enterprise-level API security and monitoring.

- Client-Specific Responses: Adapts responses for web, mobile, and IoT clients, ensuring consistent performance across platforms.

- Response Aggregation: Combines data from multiple services into a single response, improving user experience.

Example Scenario: An e-commerce platform where both the mobile app and website require product details, pricing, and stock availability.

The API Gateway aggregates responses from multiple microservices, delivers a unified result, and enforces authentication and usage limits for thousands of concurrent users.
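The aggregation step in this scenario could look roughly like the sketch below. The three `fetch_*` functions are hypothetical stand-ins for calls to separate microservices and return hard-coded sample data; the point is that the gateway calls them concurrently and merges the results into one response.

```python
import asyncio
from typing import Any, Dict


# Hypothetical stand-ins for network calls to three separate microservices.
async def fetch_details(product_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)  # placeholder for real I/O
    return {"name": "Example Widget"}


async def fetch_price(product_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"price": 19.99, "currency": "USD"}


async def fetch_stock(product_id: str) -> Dict[str, Any]:
    await asyncio.sleep(0)
    return {"in_stock": True}


async def get_product_view(product_id: str) -> Dict[str, Any]:
    """Call all three services concurrently and merge their responses."""
    details, price, stock = await asyncio.gather(
        fetch_details(product_id),
        fetch_price(product_id),
        fetch_stock(product_id),
    )
    return {"id": product_id, **details, **price, **stock}
```

The mobile app and the website would each make a single request and receive one combined payload, instead of calling three services themselves.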

Similar approaches are used in industries that require secure and reliable API integration between multiple systems, ensuring consistent communication and data flow across services.

When to Use a Load Balancer?

While API Gateways handle API-level control, load balancers focus on distributing traffic and maintaining server stability. Here’s how they are commonly used:

- Traffic Distribution: Spreads requests evenly across servers to prevent overload. Services such as AWS Elastic Load Balancing (ELB) and NGINX are widely adopted in production environments.

- High Availability: Detects unhealthy servers and redirects traffic to maintain uptime.

- Scalability: Supports horizontal scaling by adding or removing servers without disruption.

- Global Traffic Management: Routes requests to the nearest server, reducing latency for worldwide users. Tools like Azure Load Balancer are used in cloud deployments to ensure performance and reliability.

Example Scenario: During a flash sale on the same e-commerce platform, traffic spikes dramatically. A Load Balancer distributes requests across multiple servers, preventing crashes and keeping the checkout process smooth for all users.

Organizations can maintain stable, secure, and high-performing applications by combining API Gateways for API-level management and Load Balancers for server-level distribution.

Frequently Asked Questions (FAQ)

2. Do You Need an API Gateway for a Small Application?

It depends on your architecture.

- For small apps with a single backend service, an API Gateway may be unnecessary overhead.

- If the application plans to scale into microservices or serve multiple clients, implementing an API Gateway early can save time later.

3. How Does a Load Balancer Improve Website Performance?

Load balancers improve performance by:

- Distributing traffic evenly across servers to prevent overload.

- Redirecting requests away from unhealthy servers to reduce downtime.

- Allowing horizontal scaling without affecting the user experience.

This ensures faster response times and reliable access even during traffic spikes.

4. Can an API Gateway Handle Security Alone?

Not entirely. An API Gateway can handle authentication, rate limiting, and IP filtering, but it is not a replacement for network-level security. For comprehensive protection:

- Combine it with a Load Balancer or firewall.

- Monitor services for unusual traffic patterns.

- Enforce security policies at both the API and server levels.

For complete protection, combine it with network-level controls and consistently monitor both API and server traffic.

5. Which Layer Should You Choose for Your Load Balancer: Layer 4 or Layer 7?

- Layer 4 Load Balancer: Routes traffic based on IP addresses and ports. Ideal for high-performance applications with simple routing needs.

- Layer 7 Load Balancer: Routes traffic based on application-level data such as headers and URLs. Offers advanced routing and content-based decisions.

The choice depends on traffic complexity and control requirements.

6. Are There Any Free or Open-Source API Gateways and Load Balancers?

Yes, several open-source options exist for cost-effective solutions:

- API Gateways: Kong, Tyk, KrakenD

- Load Balancers: NGINX, HAProxy

These tools provide basic routing, security, and traffic management features suitable for startups or small projects.

Conclusion: Making the Right Choice for Your System Architecture

API Gateways and Load Balancers each serve distinct roles, yet together they keep modern applications running smoothly. Specifically:

- API Gateways: Manage API traffic, enforce security, and simplify client interactions.

- Load Balancers: Maintain server stability, distribute requests efficiently, and ensure high availability.

Understanding how both fit into your architecture helps teams build systems that are reliable, scalable, and prepared for traffic spikes.

The final takeaway: Using both tools strategically can save time, reduce risk, and improve overall performance.

For teams looking to scale applications or implement microservices, partnering with an experienced Backend Development expert can make integration seamless and keep your system stable while focusing on delivering features users love.
