AI now shapes enterprise decisions in areas such as hiring, credit scoring, and customer interactions. As reliance on automation increases, so do risks such as bias, poor transparency, and weak governance.

For instance, a financial firm’s AI-based loan approval system unintentionally favored certain applicant groups, leading to complaints and internal audits. Around 36% of organizations reported losses linked to AI bias, affecting both customers and operations. This shows why responsible AI practices are essential from the start.

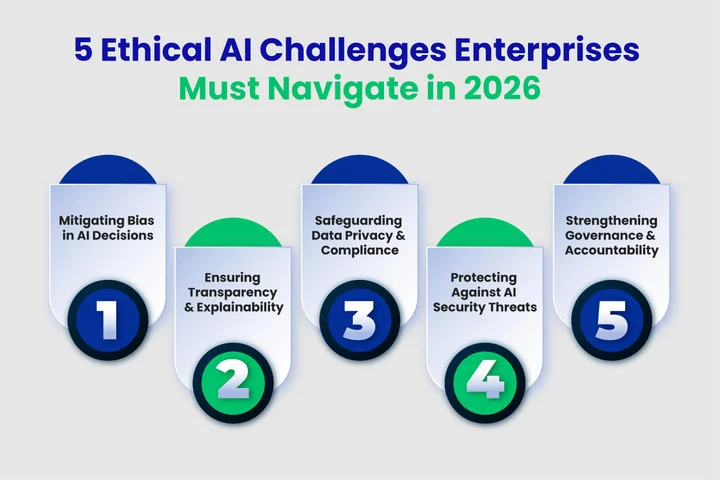

Ethical risks go beyond bias. They also include transparency gaps, data privacy concerns, security vulnerabilities, and governance issues.

In this blog, we’ll explore five critical ethical AI challenges that enterprises must address in 2026 and discuss practical steps to turn potential pitfalls into opportunities.

Challenge 1: Bias and Fairness in Enterprise AI Systems

Bias arises when a model learns patterns that unfairly favor one group, undermining trust and decision accuracy. The challenge grows sharper in 2026 as more enterprises rely on automated decision pipelines.

Have you ever seen how one unfair prediction can shift a significant decision?

Industry findings show how persistent this problem is: 77% of companies that ran bias tests still found bias in their AI systems.


Significant Causes of Bias in Enterprise AI Systems:

- Unbalanced or limited training datasets

- Missing diversity in model inputs

- Sparse human review during deployment

- Lack of structured audit cycles

Fairness gaps can also erode employee morale and customer trust, while compliance teams face growing pressure to meet transparency requirements.

How Can Enterprises Address Bias?

- Adopt AI risk management practices that include regular fairness audits

- Increase data diversity to reduce skew

- Add human oversight to review high-impact decisions

- Document model behavior as part of enterprise AI ethics guidelines
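A regular fairness audit can start with something as simple as comparing approval rates across groups. The sketch below is a minimal illustration, assuming decisions are available as (group, approved) pairs. The function names and sample data are hypothetical, and the 0.8 threshold borrows the "four-fifths" heuristic from US employment-selection guidance, which may not be the right bar for every use case.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group, from an iterable of (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def fairness_audit(decisions, threshold=0.8):
    """Compare the lowest group approval rate with the highest.

    A ratio below `threshold` flags the model for human review. The 0.8 default
    mirrors the "four-fifths" heuristic; it is an assumption, not a universal rule.
    """
    rates = selection_rates(decisions)
    highest = max(rates.values())
    # If no group is approved at all, treat the groups as equally treated.
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {"rates": rates, "impact_ratio": ratio, "flagged": ratio < threshold}


# Illustrative data only: group A approved 8 of 10, group B approved 5 of 10.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
report = fairness_audit(sample)
print(report)
```

Here group B's approval rate is 62.5% of group A's, below 0.8, so the audit flags the model. A flag is a prompt for human investigation, not proof of unfairness on its own.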

Real-world Example:

A loan-approval model may approve fewer applicants from certain groups if its training data reflects past lending bias. Enterprises aiming for fair, unbiased AI decisions can implement AI and ML solutions that improve both model accuracy and fairness.

Challenge 2: Transparency and Explainability Requirements

As AI becomes embedded in day-to-day operations, teams need clarity on why systems make particular decisions. When models operate as closed “black boxes,” confusion grows and trust declines, especially in environments where decisions affect customers, finances, or compliance.

A recent IBM Global AI Adoption Index report shows that 40% of organizations struggle to explain AI-generated outputs to internal teams or clients. This gap slows decision-making and complicates risk management.

Main Factors Behind Transparency Challenges:

- Complex algorithms make it hard to interpret outcomes

- Limited documentation of model logic and training data

- Inconsistent reporting across teams and departments

- Insufficient tools for explainability and audit

Limited explainability not only affects confidence but can also invite regulatory pressure when automated decisions influence credit scoring, pricing, or customer rights.

How Enterprises Can Improve Transparency:

- Maintain thorough documentation of data, models, and assumptions

- Implement explainable AI tools to clarify model decisions

- Train staff to interpret AI outputs effectively

- Conduct regular reviews to ensure accountability in results

Example:

A credit scoring system may decline applications based on hidden features the AI considers essential.

By using explainable AI tools and giving loan officers a summary of the key factors behind each decision, businesses can make decisions understandable and defensible, which builds trust and reduces the likelihood of disputes.
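To make a "summary of key factors" concrete, here is a minimal sketch for a simple logistic-regression credit model. The feature names, weights, intercept, and "typical applicant" baseline are all hypothetical. For a linear model, each feature's contribution relative to the baseline can be read off directly; for nonlinear models, explainable AI libraries such as SHAP estimate comparable per-feature attributions.

```python
import math

# Hypothetical logistic-regression credit model. Weights, intercept, and the
# "typical applicant" baseline are illustrative assumptions, not real values.
WEIGHTS = {"income_k": 0.03, "debt_ratio": -4.0, "late_payments": -0.6, "years_employed": 0.1}
INTERCEPT = -1.0
BASELINE = {"income_k": 60.0, "debt_ratio": 0.3, "late_payments": 1.0, "years_employed": 5.0}


def approval_probability(applicant: dict) -> float:
    """Logistic model: sigmoid of the weighted sum of features."""
    z = INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def key_factors(applicant: dict, top_n: int = 3) -> list:
    """Each feature's log-odds contribution relative to the baseline applicant,
    ranked by magnitude. For a linear model these contributions sum to the
    difference in log-odds between this applicant and the baseline."""
    contributions = {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]


def officer_summary(applicant: dict) -> str:
    """Plain-language summary a loan officer could read alongside the decision."""
    lines = [f"Approval probability: {approval_probability(applicant):.2f}"]
    for feature, contribution in key_factors(applicant):
        direction = "raised" if contribution > 0 else "lowered"
        lines.append(f"- {feature} {direction} the score ({contribution:+.2f} log-odds)")
    return "\n".join(lines)


applicant = {"income_k": 45, "debt_ratio": 0.55, "late_payments": 3, "years_employed": 2}
print(officer_summary(applicant))
```

For the sample applicant, late payments, debt ratio, and income come out as the top three factors, all pulling the score down, which gives the loan officer something specific to discuss with the applicant.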

Struggling with unclear AI decisions? Stronger explainability can improve trust, accuracy, and confidence in outcomes.

Challenge 3: Data Privacy and Compliance Pressures in 2026

Enterprises rely on AI systems that handle sensitive personal and business data. With tighter regulations in 2026, privacy management has become critical. A single breach can damage credibility and trigger financial penalties.

Research shows that 59% of companies struggle to keep AI systems compliant with new privacy rules. The gap between AI adoption and regulatory readiness is getting wider.

Why Enterprises Struggle With Privacy And Compliance:

- Complex and rapidly changing data protection laws

- AI models pulling data from many different sources

- Limited visibility into how models store or reuse sensitive information

- Incomplete documentation of automated decisions

These challenges demand consistent oversight. Weak controls increase the risk of violations, brand damage, and operational issues.

How Can Enterprises Manage Privacy Risks?

- Apply a clear, shared AI compliance strategy across teams

- Run regular audits to spot compliance gaps

- Encrypt sensitive information at every stage

- Maintain transparent records of data usage

- Train teams on privacy rules and ethical data handling

For Example:

A healthcare company using AI to review patient records can face penalties if identifiable data leaks. Anonymizing details, securing storage, and auditing workflows help maintain compliance and support responsible AI practices in 2026.
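As a minimal sketch of the "anonymizing details" step, the code below strips direct identifiers, replaces the patient ID with a keyed hash (HMAC-SHA256), and coarsens exact age into a band before a record reaches the model. The field names are hypothetical. Strictly speaking this is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, so secure storage and audits remain necessary.

```python
import hashlib
import hmac
import os

# Hypothetical field names for direct identifiers that the model never needs.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}


def pseudonymize_id(patient_id: str, key: bytes) -> str:
    """Keyed hash: the same ID always maps to the same pseudonym,
    and linking it back to a patient requires the secret key."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def age_band(age: int) -> str:
    """Generalize exact age into a 10-year band, e.g. 47 -> '40-49'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def prepare_for_model(record: dict, key: bytes) -> dict:
    """Drop direct identifiers, replace the ID with a pseudonym, and coarsen age."""
    cleaned = {
        k: v
        for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k not in ("patient_id", "age")
    }
    cleaned["patient_ref"] = pseudonymize_id(record["patient_id"], key)
    cleaned["age_band"] = age_band(record["age"])
    return cleaned


if __name__ == "__main__":
    key = os.urandom(32)  # assumption: in production, load from a secrets manager
    record = {"patient_id": "P-1042", "name": "Jane Doe", "email": "jane@example.com",
              "age": 47, "diagnosis_code": "E11.9"}
    print(prepare_for_model(record, key))
```

Keeping the key outside the analytics environment means the team training the model cannot re-identify patients on its own.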

Challenge 4: AI Security Risks and Model Vulnerabilities

AI systems introduce new security gaps that many enterprises overlook. Models can be manipulated, poisoned, or exploited, sometimes without leaving evident traces. A single vulnerability can expose sensitive data or disrupt core business operations.

Recent data shows that 13% of organizations globally reported breaches of AI models or applications between March 2024 and February 2025. Among those, 97% lacked proper AI access controls.

Several Factors Contribute To AI Security Risks:

- Adversarial attacks that manipulate model inputs

- Weak authentication or access control for AI systems

- Poorly secured training and inference pipelines

- Lack of monitoring for unusual model behavior

Weak security can lead to data leaks, lost intellectual property, or operational disruption.

To Strengthen Security, Enterprises Should:

- Encrypt and secure AI model endpoints and APIs.

- Run regular adversarial testing and vulnerability assessments.

- Monitor model output and input patterns for anomalies.

- Limit access to training data and inference pipelines.

- Integrate AI-specific security controls into existing enterprise security frameworks.

- Update models and systems promptly to patch security gaps.
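Given how many breached organizations lacked proper AI access controls, limiting who can touch training data and inference pipelines is a natural first step. The sketch below shows a minimal deny-by-default, role-based check in Python. The roles, action strings, and the `predict` placeholder are hypothetical; in production this check usually lives in an identity and access management layer or API gateway rather than in application code.

```python
from functools import wraps

# Hypothetical role-to-permission map. Anything not listed is denied by default.
PERMISSIONS = {
    "data_steward": {"training_data:read", "training_data:write"},
    "ml_engineer": {"training_data:read", "model:deploy"},
    "checkout_service": {"inference:invoke"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in PERMISSIONS.get(role, set())


def requires(action: str):
    """Decorator that checks the caller's role before running a protected operation."""
    def decorator(func):
        @wraps(func)
        def wrapper(role: str, *args, **kwargs):
            if not is_allowed(role, action):
                raise PermissionError(f"role {role!r} is not allowed to {action!r}")
            return func(role, *args, **kwargs)
        return wrapper
    return decorator


@requires("inference:invoke")
def predict(role: str, features: list) -> float:
    # Placeholder for a real model call.
    return sum(features) / len(features)
```

Because permissions are granted explicitly per role, an engineer who can read training data still cannot call the production model endpoint unless that right is added deliberately.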

For Instance:

A recommendation engine could be tricked into promoting specific products or leaking sensitive user data. Regular security assessments, strict access controls, and continuous monitoring reduce that risk.
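Continuous monitoring can begin with a simple screen on incoming requests. This minimal sketch records the mean and spread of each feature in the training data and flags requests with missing fields, unexpected fields, or values far outside the training range (measured as a z-score). The feature names and the 4.0 threshold are assumptions. It only catches crude manipulation; subtle adversarial inputs that stay within normal ranges need dedicated adversarial testing.

```python
import statistics


class InputMonitor:
    """Flags inference requests whose features fall far outside the training data.

    A crude screen: it catches out-of-range or malformed inputs, not subtle
    adversarial examples that stay within normal ranges.
    """

    def __init__(self, training_rows: list, threshold: float = 4.0):
        self.threshold = threshold
        self.stats = {}
        for feature in training_rows[0]:
            values = [row[feature] for row in training_rows]
            stdev = statistics.pstdev(values) or 1.0  # guard against zero spread
            self.stats[feature] = (statistics.fmean(values), stdev)

    def check(self, request: dict) -> list:
        """Return (feature, reason) pairs; an empty list means nothing looked unusual."""
        alerts = []
        for feature, (mean, stdev) in self.stats.items():
            if feature not in request:
                alerts.append((feature, "missing"))
                continue
            z = abs(request[feature] - mean) / stdev
            if z > self.threshold:
                alerts.append((feature, f"z-score {z:.1f}"))
        for feature in request:
            if feature not in self.stats:
                alerts.append((feature, "unexpected field"))
        return alerts


# Hypothetical features for a recommendation engine's inputs.
training = [
    {"session_minutes": 12, "items_viewed": 8},
    {"session_minutes": 20, "items_viewed": 15},
    {"session_minutes": 16, "items_viewed": 11},
]
monitor = InputMonitor(training)
print(monitor.check({"session_minutes": 15, "items_viewed": 900}))
print(monitor.check({"session_minutes": 15, "items_viewed": 10, "debug": True}))
```

In practice, alerts like these would feed a dashboard or security incident queue rather than block requests outright, so analysts can separate genuine attacks from unusual but legitimate traffic.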

Challenge 5: Governance, Accountability, and Policy Alignment

AI adoption continues to expand across departments, making governance one of the most significant pressure points for enterprises in 2026.

When policies are unclear or responsibilities overlap, teams face confusion, inconsistent decisions, and unnecessary delays. Many organizations still struggle to manage who controls what.

A Deloitte APAC Trustworthy AI Assessment found that 91% of organizations have only basic or still-developing AI governance, highlighting the gap between adoption and mature governance.

Several Gaps Contribute To Governance Challenges:

- Responsibilities for AI decisions may be unclear.

- Policy documentation is often inconsistent or outdated.

- Ethical and compliance guidelines vary across teams.

- Oversight of AI performance, drift, and risk is limited.

These issues can create operational delays, compliance concerns, and ethical inconsistencies. However, structured governance helps teams stay aligned, improves accountability, and supports responsible AI use.

Steps That Support Stronger Governance:

- Assign clear ownership for AI projects and decisions.

- Develop formal AI ethics and governance guidelines.

- Conduct periodic audits to check compliance and policy alignment.

- Document model behavior, data use, and decision impact.

- Train teams on governance expectations and regulatory updates.

Real-world Example:

A financial firm using an AI risk model may see different outcomes when separate teams follow different review standards. With formal governance, documented workflows, and consistent accountability, the organization can achieve fairer, more compliant, and more predictable results.
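Clear ownership and documentation become easier to enforce when every model has a registry entry that can be checked automatically. The sketch below is a minimal, hypothetical model record with a function that lists governance gaps: no owner, undocumented use or data sources, an overdue audit, or a review process that differs from the shared standard. The field names, the 180-day audit interval, and the `AI-REVIEW-v1` label are assumptions, not a reference to any specific governance framework.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical identifier for the organization's single, shared review policy.
REQUIRED_REVIEW_STANDARD = "AI-REVIEW-v1"


@dataclass
class ModelRecord:
    """Registry entry documenting who owns a model and how it is governed."""
    name: str
    owner: str                      # accountable person or team
    intended_use: str
    data_sources: List[str] = field(default_factory=list)
    last_audit: Optional[date] = None
    review_standard: str = ""


def governance_gaps(record: ModelRecord, today: date, audit_interval_days: int = 180) -> List[str]:
    """List the policy checks this record fails; an empty list means no gaps found."""
    gaps = []
    if not record.owner:
        gaps.append("no accountable owner")
    if not record.intended_use:
        gaps.append("intended use undocumented")
    if not record.data_sources:
        gaps.append("data sources undocumented")
    if record.last_audit is None or today - record.last_audit > timedelta(days=audit_interval_days):
        gaps.append("audit overdue")
    if record.review_standard != REQUIRED_REVIEW_STANDARD:
        gaps.append("review standard differs from the shared policy")
    return gaps


risk_model = ModelRecord(name="credit-risk-v3", owner="", intended_use="Loan risk scoring",
                         data_sources=["loan_history_2019_2024"], review_standard="team-b-checklist")
print(governance_gaps(risk_model, today=date(2026, 3, 1)))
```

Running a check like this across the whole model inventory turns "who controls what" into a list of concrete gaps that can be assigned and closed.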

Frequently Asked Questions (FAQs)

2. Can AI Bias Persist Even If Companies Test Their Models?

Yes, bias can still appear, even after testing, because models learn from historical data.

Common reasons include:

- Training datasets that lack diversity

- Legacy information with hidden patterns

- Missing human oversight in deployment

- Poorly maintained audit cycles

Consequently, ongoing monitoring, not a one-time test, is essential to keeping systems accurate, fair, and reliable.

3. How Can Enterprises Ensure Their AI Systems Stay Compliant With New Regulations?

Organizations can stay compliant by creating a structured privacy and governance process. Key steps include:

- Regular AI audits

- Clear documentation of model decisions

- Centralized data governance

- Updating teams on new regulations

These steps help enterprises align with GDPR, HIPAA, and emerging AI laws, such as the EU AI Act.

4. Are AI Security Risks Different From Traditional Cybersecurity Threats?

Yes, they differ significantly. AI introduces unique vulnerabilities because systems can be attacked through their data, model logic, or API endpoints. Unlike traditional systems, AI can be manipulated without directly breaching infrastructure.

5. What Happens If an Enterprise Has Weak AI Governance?

Weak governance can lead to multiple operational and ethical issues, including inconsistent decisions and compliance violations. Additionally, it can result in:

- Poor documentation of how models make decisions

- Lack of accountability

- Increased risk of bias or errors

- Difficulty proving compliance with regulators

Strong governance ensures every team follows the same ethical and operational standards.

Summary

Addressing ethical AI challenges in 2026 requires more than awareness. It demands structured action. Enterprises that proactively tackle bias, improve transparency, and ensure strong governance can protect trust, avoid compliance issues, and improve operational outcomes.

Responsible AI implementation starts with expert guidance and transparent processes. AI consulting services or other specialized support can help ensure systems are explainable, fair, and aligned with emerging regulations.

For teams planning generative AI projects, overlooking ethical considerations often leads to setbacks. Enterprises that build in oversight, transparency, and responsible practices from the start can avoid the challenges described in Generative AI Project Failures and turn potential risks into opportunities for growth.

In the end, combining expert guidance, structured governance, and continuous monitoring helps keep AI systems innovative, ethical, and aligned with enterprise goals.
