Amazon has grown from an online bookseller into a global retail and cloud computing leader. In Q1 2025, AWS reported approximately $29 billion in revenue from its cloud computing and hosting services. Among its many services, AWS Lambda enables scalable serverless applications, where fine-tuning performance is essential for maximizing efficiency and controlling costs.

Lambda performance tuning combines several strategies and best practices that directly affect user experience and application responsiveness. The primary focus is on reducing cold starts, right-sizing memory allocation, shortening execution duration, and managing dependencies.

Performance tuning also includes optimizing the code itself, selecting an appropriate runtime, and ensuring that external resources such as databases and APIs are accessed efficiently. By applying these techniques, you can achieve faster execution times, lower costs, and better scalability for your serverless applications.

In this blog, we will discuss the AWS Lambda best practices to optimize performance and secure peak efficiency for a Lambda deployment.

Whether you are just getting started or are already experienced, these AWS Lambda insights will help you maximize your application’s performance while making the most of AWS’s capabilities.

1. Select the Most Suitable Runtime

Choosing the right runtime is one of the most basic steps in tuning AWS Lambda performance, and it affects cost, speed, and developer productivity. Lambda supports various runtimes, including Node.js, Python, Java, Go, and .NET, each offering unique advantages and trade-offs.

For instance, Node.js and Python are ideal for general-purpose serverless computing due to their rapid cold starts and excellent support for asynchronous operations. Their extensive ecosystems also simplify development through a wide array of libraries and frameworks.

Java and .NET, on the other hand, excel in compute-intensive workloads, thanks to their powerful concurrency models and mature runtime optimizations. However, these runtimes often experience longer cold start times, which may affect performance in latency-sensitive use cases.

How to Choose the Right Runtime?

To determine the best runtime, consider factors such as average execution duration, resource usage, scalability needs, and your team’s familiarity with the language. Benchmark your shortlisted runtimes under realistic workloads to make an informed decision.

2. Allocate the Right Amount of Memory

AWS Lambda allows memory allocation from 128 MB up to 10 GB. This directly influences the CPU power available to your function; higher memory results in more CPU, which often leads to better performance.

How to Achieve Memory Optimization?

- Performance Benchmarking: Test your function with varying memory configurations. Begin with a lower allocation and incrementally increase it while measuring changes in execution time and cost efficiency.

- Use CloudWatch Metrics: Monitor function performance via CloudWatch. Analyze memory usage, execution time, and billing metrics to identify if the function is under-provisioned or over-provisioned, and adjust accordingly.

- Balancing Cost and Speed: While more memory can boost performance, it also raises the price per millisecond of execution. A faster run can offset the higher rate, so aim for the memory configuration that delivers the required execution time at the lowest total cost.
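
To make the trade-off concrete, here is a minimal sketch of the cost arithmetic. Lambda bills duration in GB-seconds, so the charge for one invocation is roughly memory (GB) times duration (seconds) times a rate. The rate constant and the benchmark numbers below are illustrative assumptions, not current AWS pricing or real measurements, and the helper names are hypothetical.

```python
from typing import Dict, Optional

# Illustrative rate only; check the AWS Lambda pricing page for your region.
ASSUMED_PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb: int, duration_ms: float,
                    price_per_gb_second: float = ASSUMED_PRICE_PER_GB_SECOND) -> float:
    """Estimate the duration charge for one invocation (request fee excluded)."""
    gigabytes = memory_mb / 1024
    seconds = duration_ms / 1000
    return gigabytes * seconds * price_per_gb_second


def cheapest_within_budget(benchmarks: Dict[int, float],
                           max_duration_ms: float) -> Optional[int]:
    """Return the memory size with the lowest cost that still meets the latency budget."""
    candidates = {
        memory: invocation_cost(memory, duration)
        for memory, duration in benchmarks.items()
        if duration <= max_duration_ms
    }
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


# Hypothetical benchmark results: memory (MB) -> average duration (ms).
benchmarks = {128: 2400.0, 512: 520.0, 1024: 270.0, 2048: 260.0}
best = cheapest_within_budget(benchmarks, max_duration_ms=600.0)
```

With these made-up numbers, 128 MB misses the 600 ms budget and 2048 MB costs about twice as much as 1024 MB for almost no speed gain, which is the pattern to look for in your own measurements.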

3. Reduce the Function Package Size

The deployment package size directly affects cold start duration and overall function efficiency. Keeping the package lean can significantly improve startup time.

How to Minimize Your Lambda Package?

- Trim Dependencies: Only include essential libraries and modules. Regularly audit your dependencies and remove any that are unused or redundant. Tools like Webpack or Parcel can help eliminate dead code through tree-shaking.

- Use Lambda Layers: Offload shared dependencies to Lambda layers. This reduces package duplication across functions and simplifies updates.

- Minify and Compress Code: Use minification tools like UglifyJS for JavaScript or Pyminifier for Python to shrink your source code.

- Exclude Development Files: Use package managers (e.g., npm, pip) with flags that omit development dependencies from your production builds.

4. Shrink Deployment Artifacts

Deployment artifacts encompass your code and all associated assets. Smaller artifacts accelerate deployment and reduce initialization latency.

How to Minimize Artifacts?

- Compress Before Uploading: Always zip your deployment packages. Compression reduces file size and shortens deployment times.

- Ignore Redundant Files: Use .npmignore, .gitignore, or .dockerignore to exclude files like tests, documentation, and local configurations.

- Optimize Static Assets: Compress images and other static files with tools like ImageMagick or TinyPNG to cut down file size without sacrificing quality.

- Consider Container Images: For larger and more complex setups, use Lambda container images. They support up to 10 GB and provide more control over runtime environments and dependencies.

5. Address Cold Start Latency

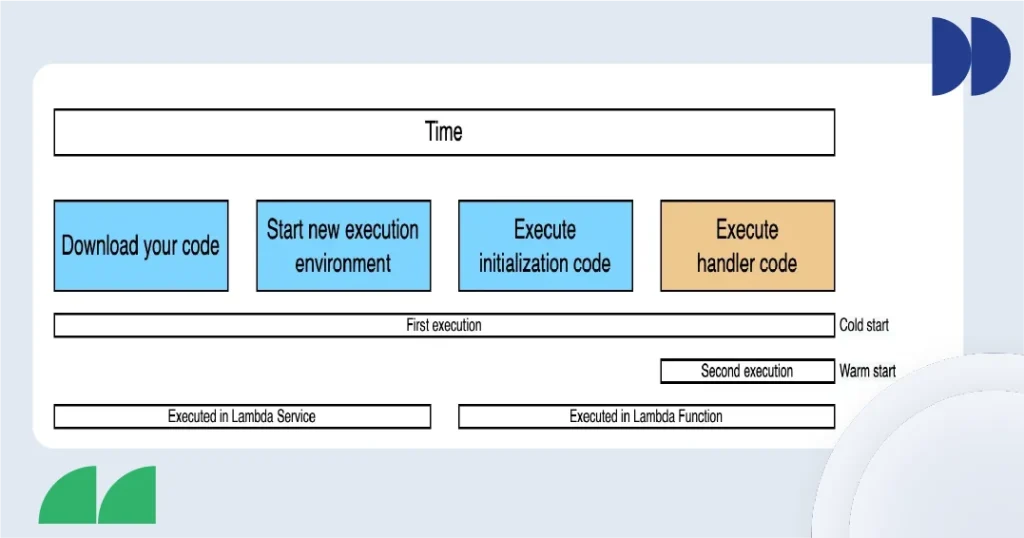

Cold starts occur when Lambda initializes a function for the first time or after a period of inactivity, leading to delays. Minimizing this latency is crucial for real-time or user-facing applications.

How to Achieve Lambda Cold Start Optimization?

- Provisioned Concurrency: Reserve a specific number of warm instances to ensure immediate availability. While this incurs extra cost, it guarantees consistent response times.

- Streamline Initialization: Keep your initialization logic lightweight. Limit the use of external libraries, and move heavy setup tasks out of the handler so they run once per execution environment rather than on every invocation.

- Keep Functions Warm: Use scheduled triggers via CloudWatch Events (now Amazon EventBridge) to invoke functions at regular intervals, helping them stay warm and responsive.

- Leverage Container Reuse: Design functions that benefit from Lambda’s container reuse feature. Store reusable data in memory during the container’s lifecycle to avoid reloading.
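
As a rough illustration of container reuse, the sketch below does its setup at module level so that only the cold start pays for it. `load_config`, the `orders` table name, and the `INIT_COUNT` counter are hypothetical and exist only to make the behavior visible.

```python
import time

INIT_COUNT = 0  # only here so the example can show how often setup runs


def load_config() -> dict:
    """Stand-in for expensive setup (reading config, creating SDK clients, loading a model)."""
    global INIT_COUNT
    INIT_COUNT += 1
    return {"table": "orders", "loaded_at": time.time()}


# Module-level code runs once per execution environment (during the cold start),
# not once per invocation.
CONFIG = load_config()


def handler(event, context):
    # Warm invocations reuse CONFIG instead of rebuilding it.
    return {"table": CONFIG["table"], "order_id": event.get("order_id")}
```

Calling `handler` repeatedly in the same process leaves `INIT_COUNT` at 1, which mirrors what happens across warm invocations.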

6. Set Timeouts Conservatively

Timeout settings dictate the maximum execution duration for Lambda functions, ranging from 1 second to 15 minutes. Choosing the right timeout is vital for both reliability and cost management: too short and valid requests fail, too long and a hung invocation keeps billing until it is cut off.

How to Set Up a Timeout Configuration?

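- Review Historical Data: Use CloudWatch to analyze previous execution durations. Set the timeout comfortably above typical durations (a high percentile is a safer baseline than the average) so that normal fluctuation does not cause failures, while still capping the cost of a stuck invocation.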

- Incorporate Robust Error Handling: Equip your function with retry logic and graceful error handling to handle transient issues or failures without prolonged execution.

- Adjust Dynamically: Monitor execution trends over time and adjust timeout values as workloads change. Consider adaptive strategies that fine-tune timeout limits based on real-time behavior.
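
One possible heuristic for turning historical durations into a timeout is sketched below. It takes a high percentile of observed durations and multiplies it by a safety factor; the percentile, the factor, and the function name are assumptions of this sketch rather than AWS recommendations.

```python
import math


def suggest_timeout_seconds(durations_ms, percentile=99.0, headroom=1.5):
    """Suggest a timeout: a high percentile of observed durations times a safety factor."""
    if not durations_ms:
        raise ValueError("need at least one duration sample")
    ordered = sorted(durations_ms)
    # Nearest-rank percentile: the smallest sample with at least
    # `percentile` percent of all samples at or below it.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    seconds = math.ceil(ordered[rank - 1] * headroom / 1000)
    # Lambda accepts timeouts between 1 second and 900 seconds (15 minutes).
    return min(max(seconds, 1), 900)


# Hypothetical samples exported from CloudWatch, in milliseconds.
samples = [100.0] * 98 + [2000.0, 4000.0]
timeout = suggest_timeout_seconds(samples)
```

Re-running a calculation like this periodically is one simple way to adjust the timeout as workloads change.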

7. Embrace Asynchronous Execution

Asynchronous invocations decouple Lambda functions from clients, allowing background processing and a more scalable AWS Lambda architecture. These are ideal for tasks that don’t require immediate responses.

How to Implement Asynchronous Flows?

- Event-Based Triggers: Integrate services like SNS, SQS, or EventBridge to trigger Lambda functions asynchronously. These services support resilient, loosely coupled, event-driven serverless architectures.

- Set Up Dead Letter Queues (DLQs): Capture failed messages using DLQs for post-mortem analysis and reliable reprocessing. DLQs can be implemented via SQS or SNS.

- Monitoring and Alerts: Use CloudWatch to track success and failure rates of async invocations. Set up alerts to respond quickly to anomalies or spikes in failure rates.

- Custom Retry Logic: While AWS retries async invocations twice by default, additional custom logic can enhance reliability, especially in failure-prone workflows.
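
Custom retry logic inside a function often takes the form of exponential backoff with jitter. The sketch below is one minimal version; `TransientError` and `call_with_retries` are hypothetical names, and the attempt count and delays are placeholder values to tune for your workload.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (throttling, timeouts)."""


def call_with_retries(operation, max_attempts=3, base_delay=0.2, sleep=time.sleep):
    """Run `operation`, retrying TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                # Give up and re-raise so Lambda's own retries or a DLQ can take over.
                raise
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
```

Keep the total retry time well below the function timeout, otherwise the invocation may be cut off in the middle of a retry.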

8. Use Batch Processing for Efficiency

Batch processing enables Lambda to handle multiple records in a single execution, optimizing both speed and cost.

How to Apply Batch Processing?

- Integrate with SQS or Kinesis: Both services can trigger Lambda functions with a batch of records. This is useful for processing log streams, transactions, or queued messages.

- Tweak Batch Sizes: Choose a batch size that maximizes throughput without exhausting memory or exceeding timeouts. Test different sizes to find the best fit for your workload.

- Handle Partial Failures: Build logic to process remaining records when some fail. DLQs help isolate and reprocess only the problematic messages.

- Parallelize Within Batches: Leverage threading or async programming within your function code to concurrently process items in a batch.
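
For SQS-triggered functions, partial failures can be reported back to Lambda so that only the failed messages return to the queue. The sketch below assumes the event source mapping has `ReportBatchItemFailures` enabled; `process_record` and the `order_id` field are hypothetical placeholders for your own logic.

```python
import json


def process_record(body: dict) -> None:
    """Hypothetical business logic; raises on bad input."""
    if "order_id" not in body:
        raise ValueError("missing order_id")


def handler(event, context):
    failures = []
    for record in event.get("Records", []):
        try:
            process_record(json.loads(record["body"]))
        except Exception:
            # Report only this message as failed so the rest of the batch is not retried.
            failures.append({"itemIdentifier": record["messageId"]})
    # An empty list tells Lambda that the whole batch succeeded.
    return {"batchItemFailures": failures}
```

Without this response shape, one bad record causes the entire batch to be retried, which is why it pairs well with idempotent processing.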

9. Introduce Smart Caching Strategies

Caching improves performance by reducing redundant data fetching, enhancing user experience, and lowering external API calls.

How to Use Effective Lambda Caching Methods?

- Lambda Extensions: Use extensions to store data locally across invocations, reducing repeated initialization or lookups.

- External Caching Services: Integrate with Amazon ElastiCache or DynamoDB Accelerator (DAX) for fast, in-memory access to frequently used data.

- In-Memory Caching: Store repetitive data in memory during execution using standard data structures. This is particularly effective in warmed containers.

- Invalidate Wisely: Implement cache invalidation policies (such as time-based expiry, event-driven triggers, or manual purges) to ensure data accuracy.
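
In-memory caching with time-based expiry can be sketched in a few lines. `TTLCache` below is a hypothetical helper, not an AWS API; the injectable clock is only there to make the expiry behavior easy to test.

```python
import time


class TTLCache:
    """Tiny in-memory cache with time-based expiry; it lives as long as the execution environment."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._items = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        entry = self._items.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh
        value = loader(key)  # missing or expired: fetch again
        self._items[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key):
        """Manual purge, for example when an event signals that the data changed."""
        self._items.pop(key, None)


# Module-level so warm invocations share the same cache.
CACHE = TTLCache(ttl_seconds=60)
```

Each execution environment holds its own copy, so treat this as a best-effort cache and use a shared service when every instance must see the same data.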

10. Design with Stateless and Ephemeral Principles

Stateless, ephemeral functions scale better and recover faster from failures. They are fundamental to building robust and serverless-native applications.

How to Achieve Stateless Design?

- Avoid Local State: Do not rely on data stored on the Lambda file system or in memory surviving across invocations. Treat anything held locally as a disposable cache, and use services like S3, DynamoDB, or RDS for persistence.

- Leverage External State Management: Store session data and application state externally to enable horizontal scaling and improve resilience.

- Ensure Idempotency: Make sure your functions yield the same result regardless of how many times they’re invoked. This prevents errors from retries or duplicate events.

- Use Temporary Storage Judiciously: Lambda provides the /tmp directory for ephemeral storage. Use it only for short-lived, intermediate tasks that don’t require durability. If you’re implementing this in a production environment, consider using trusted AWS consulting expertise to ensure best practices are followed.
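
Idempotency is usually implemented by recording a result under a key and returning that result when the same key arrives again. The sketch below uses an in-memory stand-in for the external store; `InMemoryStore`, `make_handler`, and the `idempotency_key` field are all hypothetical names chosen for illustration.

```python
class InMemoryStore:
    """Stand-in for an external store such as a DynamoDB table (an assumption of this sketch)."""

    def __init__(self):
        self._results = {}

    def get(self, key):
        return self._results.get(key)

    def put_if_absent(self, key, value):
        # A real store should do this atomically, for example with a conditional write.
        return self._results.setdefault(key, value)


def make_handler(store, charge):
    """Build a handler that runs `charge` at most once per idempotency key."""
    def handler(event, context):
        key = event["idempotency_key"]  # hypothetical field supplied by the caller
        previous = store.get(key)
        if previous is not None:
            return previous  # duplicate delivery: return the recorded result
        result = charge(event["amount"])
        return store.put_if_absent(key, result)
    return handler
```

Because the state lives outside the function, any execution environment that receives the duplicate event returns the same answer.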

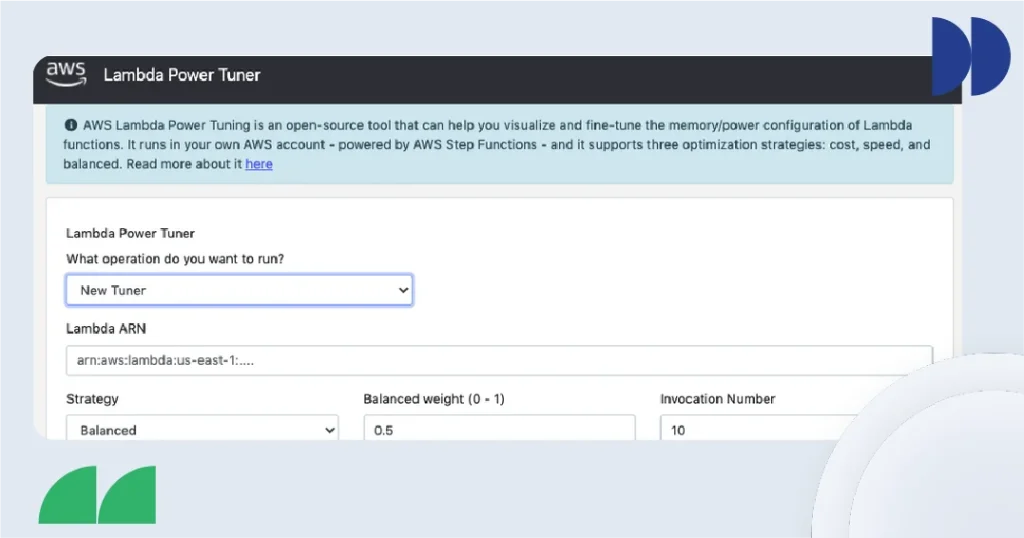

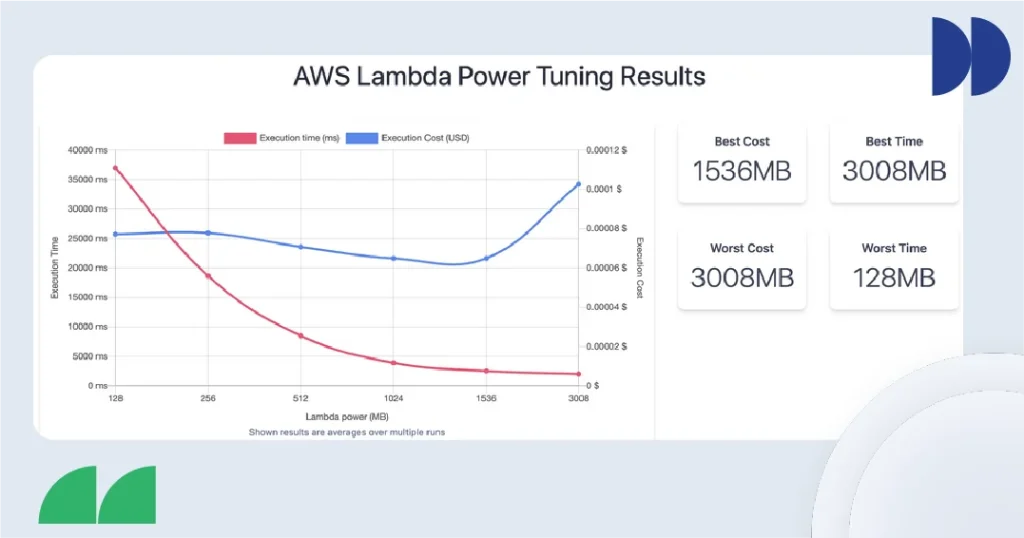

After you configure your preferences and run the tool, it generates a performance graph that summarizes execution time, memory usage, and total cost across the tested memory configurations, as illustrated below.

With the help of this data, you can select the best value of memory configuration that delivers acceptable performance without wasting resources. This step is important for minimizing the cost of your functions, particularly in high-throughput applications.

Other Tools and Techniques to Improve Performance Tuning

Here are some other quick tips you can immediately use to improve your performance:

1. Language Selection

When cold start latency is a concern, prefer interpreted languages like Node.js or Python over compiled languages such as Java or C#. While compiled languages generally offer better performance for subsequent invocations, they come with longer cold start times.

2. Java Start Time

If Java is required, be aware that it introduces higher cold start latency due to the JVM’s longer startup process. To mitigate this, consider using Provisioned Concurrency, which keeps functions initialized and ready to respond immediately.

3. Framework Choice

For Java workloads, prefer Spring Cloud Function over the more heavyweight Spring Boot web framework, as the former is better suited to serverless environments.

4. Network Configuration

Use the default Lambda network environment unless access to VPC resources with private IPs is necessary. Initializing Elastic Network Interfaces (ENIs) for VPC access can add a significant startup delay. However, AWS has made recent improvements that reduce ENI provisioning time in VPC-connected Lambda functions.

5. Dependency Management

Eliminate unnecessary dependencies to minimize your deployment package size and reduce cold start times. Include only those libraries and modules that are essential at runtime.

6. Variable Initialization

Use global/static variables or singleton objects when possible. These persist for the lifetime of the execution environment, so they do not need to be re-initialized across invocations, improving performance for subsequent calls.

7. Database Connections

Establish database connections at the global scope to enable connection reuse across invocations. Additionally, consider using Amazon RDS Proxy, which improves scalability and helps manage database connections more efficiently, especially in high-concurrency scenarios.

8. DNS Resolution

When running Lambda functions inside a VPC that interact with other AWS services, avoid operations that trigger DNS resolution, as this can introduce latency. For example, if accessing an RDS instance, launch it as non-publicly accessible to avoid unnecessary DNS lookups.

9. Dependency Injection (Java)

If using Java, opt for lightweight IoC containers like Dagger instead of full-featured frameworks like Spring for dependency injection. This keeps startup time minimal and improves cold start behavior.

10. JAR File Organization

Organize Java deployment packages by placing dependency JAR files in a separate /lib directory instead of bundling them with your function code. This helps speed up the unpacking and initialization process during deployment.

What Is the Best Way to Set Up Performance Monitoring for AWS Lambda?

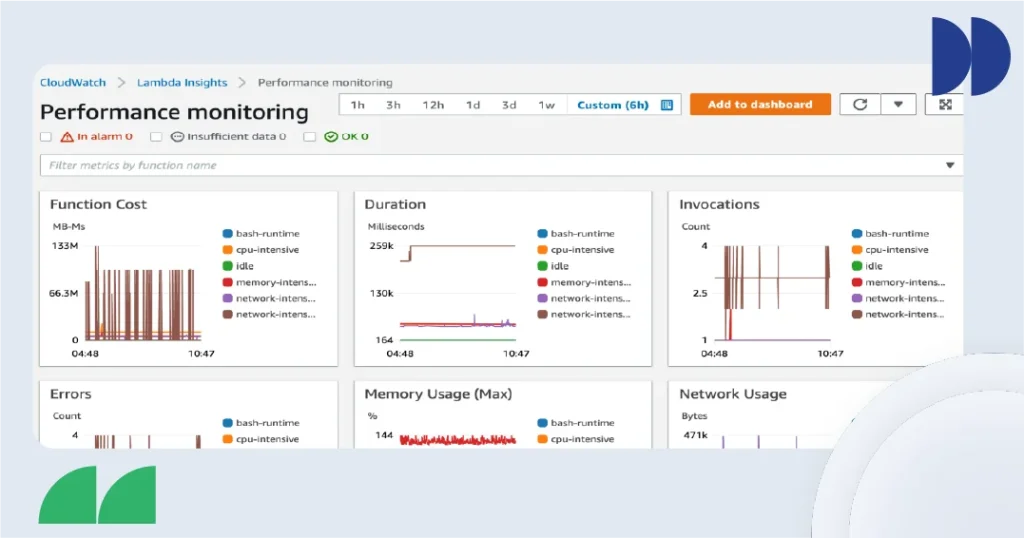

To improve AWS Lambda performance, it’s essential to implement performance monitoring. Gaining insight into function behavior during each invocation allows for precise tuning of configurations and helps maximize efficiency.

By default, Amazon CloudWatch captures and logs details for every Lambda execution through its LogGroup and LogStream structures. These logs enable the generation of dashboards displaying key metrics such as invocation counts, execution durations, error rates, and more. You can also configure custom alarms based on these metrics to proactively respond to anomalies.
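
Much of this data comes from the `REPORT` line that Lambda writes to the log stream at the end of each invocation. As a small sketch, the parser below pulls the numeric fields out of such a line; the sample line is made up, and the field names reflect the commonly seen format rather than a guaranteed contract.

```python
import re

# Longer field names come first so that "Billed Duration" is not read as "Duration".
_FIELD = re.compile(
    r"(Billed Duration|Init Duration|Duration|Memory Size|Max Memory Used): ([\d.]+) (?:ms|MB)"
)


def parse_report_line(line: str) -> dict:
    """Extract numeric fields from a Lambda REPORT log line; returns {} for other lines."""
    if not line.startswith("REPORT"):
        return {}
    return {name: float(value) for name, value in _FIELD.findall(line)}


# Made-up sample in the usual tab-separated layout.
sample = (
    "REPORT RequestId: 00000000-0000-0000-0000-000000000000\t"
    "Duration: 102.25 ms\tBilled Duration: 103 ms\tMemory Size: 128 MB\t"
    "Max Memory Used: 35 MB\tInit Duration: 150.12 ms"
)
metrics = parse_report_line(sample)
# Init Duration only appears on cold starts, so its presence marks one.
is_cold_start = "Init Duration" in metrics
```

Comparing `Max Memory Used` with `Memory Size` over many invocations is a quick way to spot over-provisioned or under-provisioned functions.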

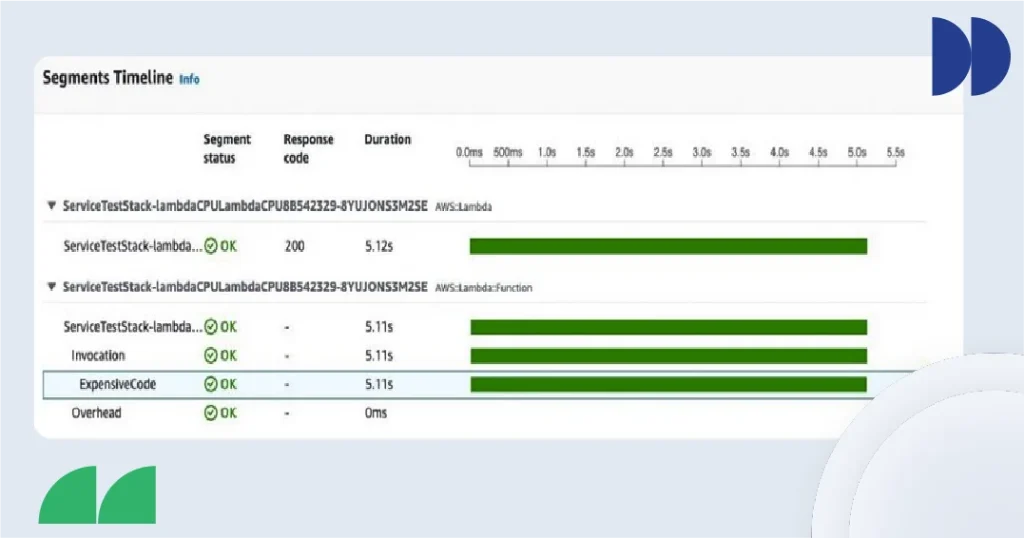

While CloudWatch provides visibility into Lambda itself, it doesn’t capture performance insights from downstream AWS services like DynamoDB or S3. For that, AWS X-Ray is a valuable tool. It offers end-to-end tracing of a Lambda function’s execution, including its interactions with other AWS services. This makes it easier to identify bottlenecks and debug issues across distributed systems.

However, integrating X-Ray into a Node.js deployment adds approximately 6 MB to the function package size and can slightly increase compute time. Therefore, it’s best to enable X-Ray only when troubleshooting specific issues and remove it once debugging is complete to maintain optimal performance.

At first glance, these metrics provide valuable insight into how your function is behaving. But the real question is, how can this data be leveraged to drive tangible performance improvements?

The answer lies in analyzing Trace Maps. These maps offer a detailed view of a function’s execution lifecycle. Specifically, they contain profiling data for instrumented functions, helping pinpoint exactly which parts of the code are introducing performance bottlenecks.

Take this example: one segment of a particular invocation stands out due to its significantly higher runtime.

Upon investigation, that segment encapsulates a Fibonacci sequence calculation, an inherently compute-intensive, CPU-bound operation. It’s important to note that such detailed trace data is only available if AWS X-Ray is enabled. If you’re not seeing these traces or segments, that’s likely the reason.

Armed with this visibility, you can begin experimenting with your function’s memory allocation, which also determines how much CPU the function receives. By adjusting the memory settings and reviewing the resulting traces, you can iteratively identify the configuration that strikes a balance between performance and cost-efficiency.

Frequently Asked Questions (FAQs)

What Are The Best Practices For AWS Lambda Tuning?

The best practices for AWS Lambda Performance Tuning include properly sizing memory to balance cost and performance, minimizing cold starts with provisioned concurrency, keeping code lightweight and efficient, using Lambda Layers for shared dependencies, and continuously monitoring with CloudWatch and X-Ray to identify issues.

How Can I Improve AWS Lambda Cold Start Times?

To improve AWS Lambda cold start times, use provisioned concurrency to keep functions warm, choose runtimes with faster startup (like Node.js or Python), minimize deployment package size, and avoid heavy initialization code inside the handler.

What Is The Best Way To Monitor AWS Lambda Performance?

The best way to monitor AWS Lambda performance is to use Amazon CloudWatch for logs and metrics, combined with AWS X-Ray for detailed tracing and identifying bottlenecks across your serverless application.

How Do I Scale AWS Lambda Functions Efficiently?

To scale AWS Lambda functions efficiently, design them to be stateless, use event-driven triggers, configure concurrency limits wisely, and leverage AWS’s automatic scaling while monitoring usage to adjust settings proactively.

How Can AWS Lambda Help With Performance And Cost Efficiency?

AWS Lambda boosts performance by automatically scaling with demand and reduces costs by charging only for actual compute time, eliminating the need to pay for idle resources.

Conclusion

Improving AWS Lambda performance plays an important role in building fast, reliable, and cost-effective serverless applications. By applying AWS Lambda best practices like right-sizing memory, reducing cold starts, and using tools for Lambda performance monitoring like CloudWatch and X-Ray, you can significantly increase function efficiency and AWS Lambda scalability.

As cloud-native architectures continue to grow, fine-tuning your Lambda functions ensures your applications remain responsive and ready to meet growing demand.

Cloud engineering experts can improve AWS environments, including Lambda performance tuning, cost control, and architectural best practices. If you’re building from scratch or improving an existing system, we can help you achieve high-performing, scalable solutions fit for your business needs.
